As user research is rebuilt into product practices, all its parts — not just the interviewing — will eventually be re-integrated.

The product team’s mean time to evidence (MTTE) is dropping sharply. “Talking to users” used to mean “getting out of the building” quite literally, or setting up a study with all the trappings that entailed. Now it’s as easy as a calendar link in an email or an info banner, or a request to the Research Operations team.

As MTTE falls, the dominant project model of user research is starting to stretch apart and unbundle. New practices emerge. Teams are moving from research projects to continuous discovery.

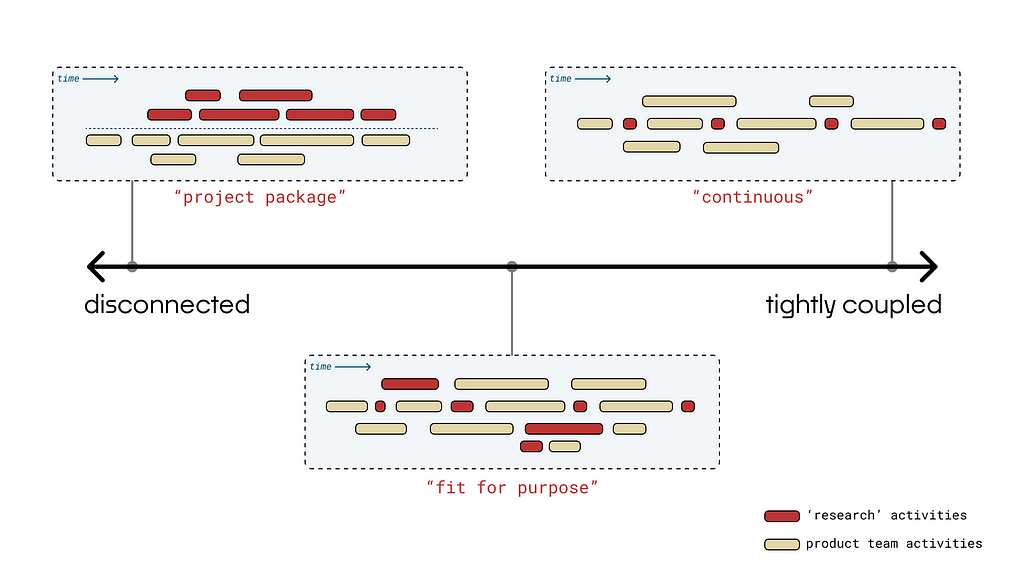

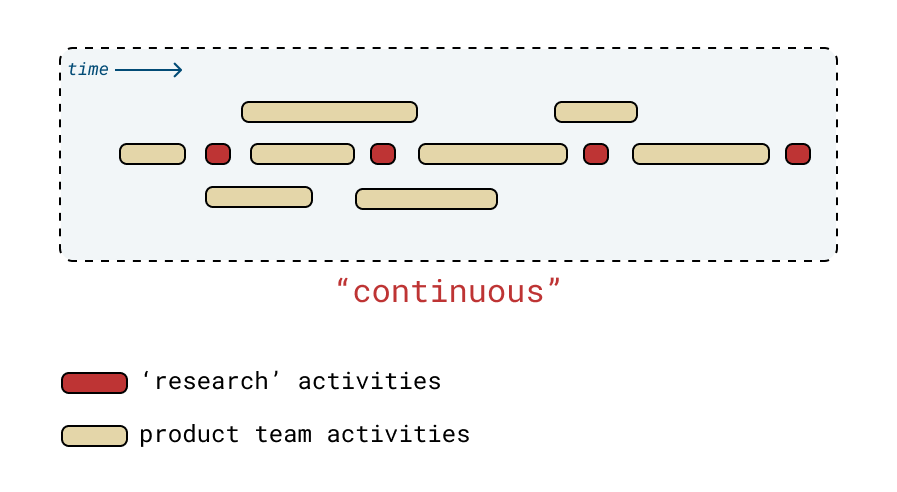

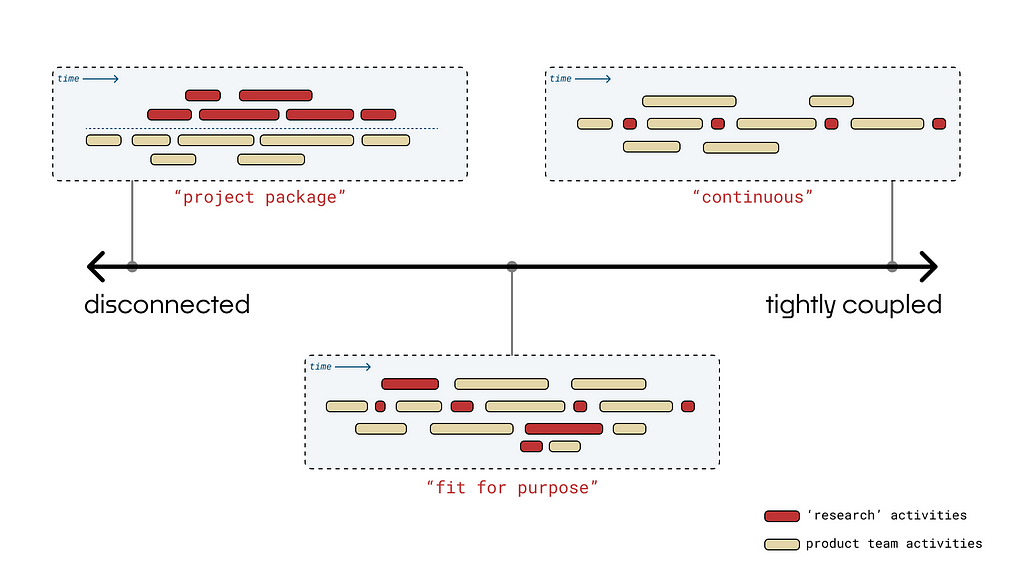

We’re seeing a hard pendulum shift from research-led projects to product-team-led discovery, shown in Figure 1 as a move from left to right.

Basic customer conversations and user interviewing activities are exiting the realm of the sacred. The activities associated with user research are unbundling and re-integrating into product work.

The product team itself is now in charge of its own sensing mechanism — a qualitative environment sampling tool — and carries the expectation of wielding it well. It comes with big questions and no user manual. Where do we use it? At what sampling rate? And how?

Projects (Too Big?)

Our starting point in this shift is research-led projects and studies. They’re our original, time-honored mode of engaging in the learning and contextual awareness that drive good design — whether technical, hardware, industrial, or software.

Rooted in academic foundations, projects are complete packages — they begin with a question and end with action, a recommendation, or at least a well-developed report.

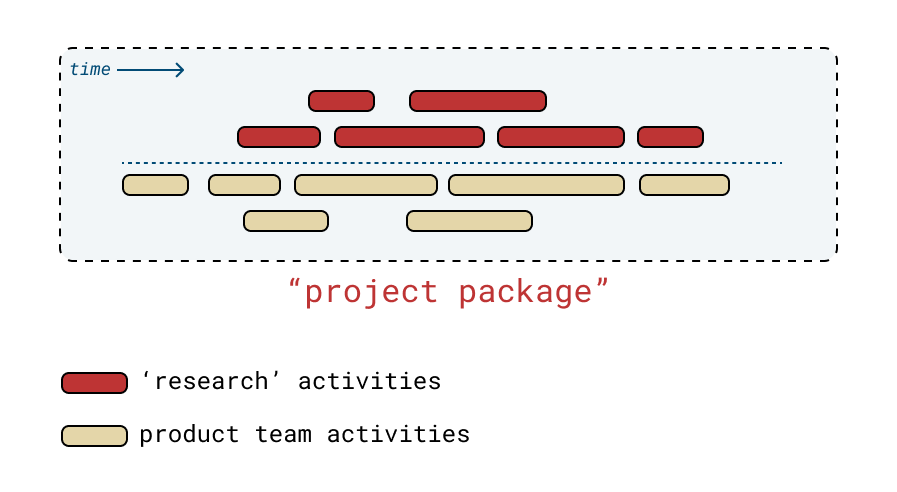

Projects have the sometimes-advantage of decoupling from immediate and ongoing work cycles, as illustrated in Figure 2. The project model has space built in for critical thinking and for reaching strong conclusions, separate from the pace of regular product activities.

There is a clear process within each project package. That process allows for crucial activities like analyzing, synthesizing, reframing, and modeling, which force us to draw meaningful conclusions and understand the wider implications of what we’ve uncovered.

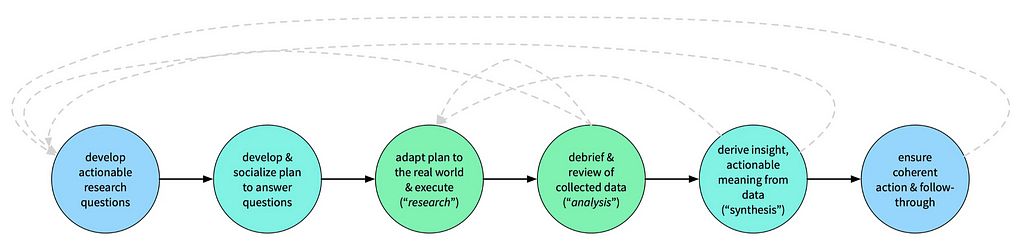

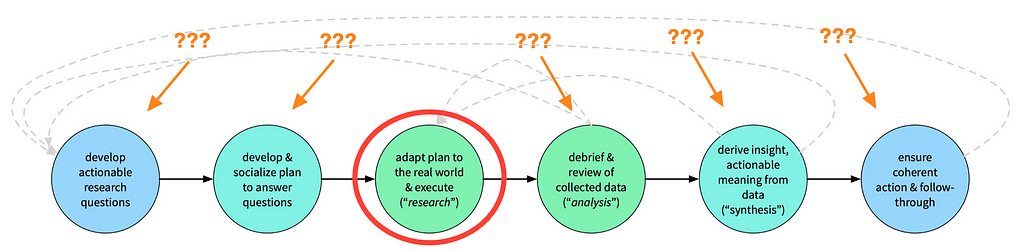

Figure 3 shows one view of that process. Although the model is over seven years old, I think it is still a valid way to guide how productive learning can unfold. As the traditional mode of research unbundles, all of these pieces should, in some form, exist in our new way of working.

Six simple stages belie a well-developed, complex set of activities in each phase. Breaking apart a project into its various component activities (see the RSF project builder for a draggable template) shows the full range of considerations in what might appear to be a simple, straightforward “research project.”

There is a lot of work under the hood — often unseen and unarticulated — crucial for turning raw signal into clear implication. The project mode is also teachable: examining and transmitting the underlying skills is easy when they fit together in a self-contained bundle of work.

But research-led projects are not always the right tool for the job. Like any method or tool, they have to fit their context of use. There is a danger of dogmatism in fighting for this project-bound, “researchers own the researching” mode without respect for the larger reality of the software development context.

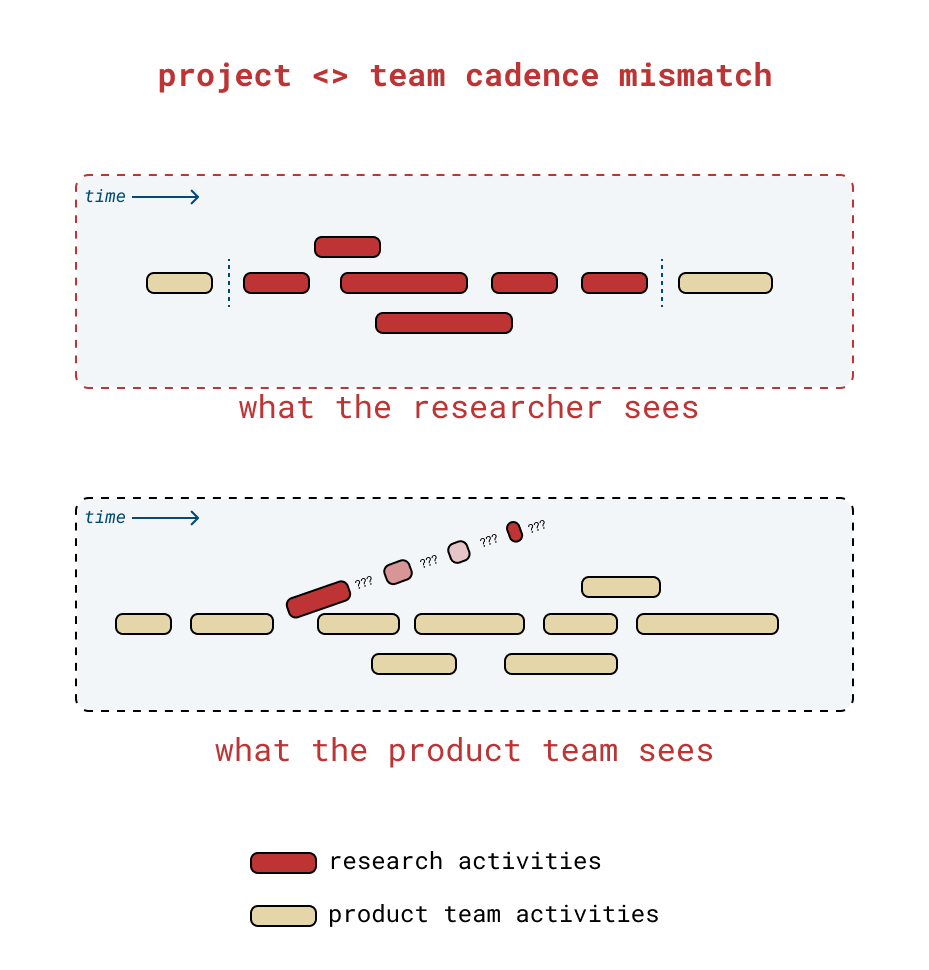

When research is tightly bundled and self-contained, it can become a black box to a team whose reality is moving at a different speed. Cadence mismatch, shown in Figure 4, is a recipe for disillusioned researchers, annoyed product teams, and general misalignment.

The team puts a question in the box, an answer comes back, and by that time the team is already gone: they found out long ago that there were more important questions to attend to. When the team moves faster than the research, especially a team that has already internalized a “build to learn” mindset, research as it was traditionally conducted no longer makes sense.

How do research activities work when the team moves so fast that fundamental assumptions may shift from week to week, or the value they seek to deliver changes at monthly intervals?

Continuous (Too Small?)

One answer, of course, is moving to cadenced and continuous discovery.

We know that product work is accelerating. The demand — as ever — is to do more with less. To take care of talking to users and learning and continuously improving the product and acting strategically and thinking big but also being attached to the details and shipping value every week.

It is not an easy situation, but it is the current-state truth for many organizations.

The success of Teresa Torres’ Continuous Discovery Habits lies in the fact that she recognized:

- This is the product reality,

- There is still a crucial need for user evidence in decision-making, and

- Many product teams don’t have the time for research projects, or the luxury of engaged, embedded researchers to solve this problem for them.

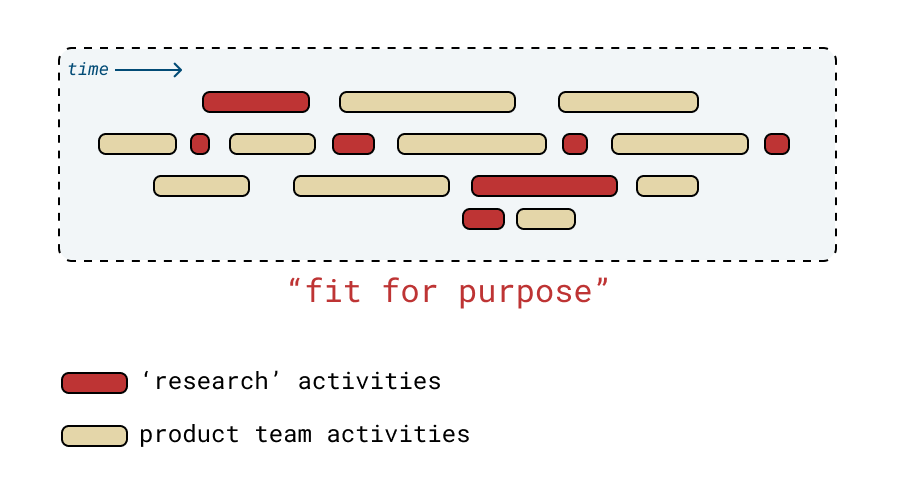

Enter product-led discovery, shown here in Figure 5:

A hallmark of this learning mode is building contextual awareness in a way that’s designed, first and foremost, to fit into the product team’s work cycles. In practice, that primarily means weekly customer conversations.

While this shift has been happening, the user research community has argued more about putting the genie back in its bottle (“should we democratize user research?”) than about solving for the real, pressing, and stressing needs of the product teams our work purports to serve.

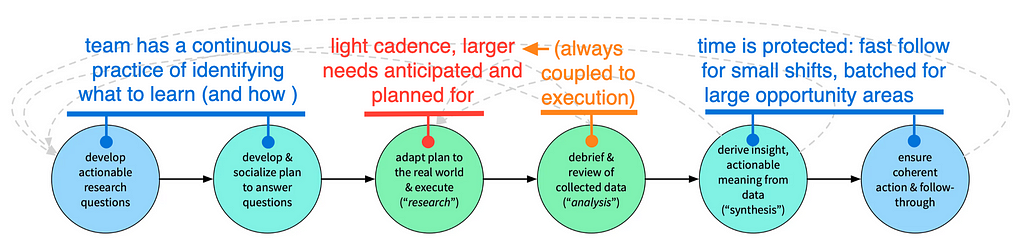

Instead of amplifying and augmenting this more continuous mode by figuring out how all the other pieces of a healthy learning practice can live in the new model (see Figure 6), we let old-guard inertia keep our conversations rooted in the past, preventing us from shaping a more effective future.

There are special flavors of critical thinking baked into research projects beyond the execution itself: triangulating what to learn and how to gather evidence, assessing the validity of our raw input, and modeling and reframing what we’ve learned to highlight implications and opportunities. And as product teams take the reins, whether eagerly or reluctantly, these supporting activities are the first things to fall through the cracks.

There are tools to help us here, of course. Teresa Torres’ Opportunity Solution Tree is one such tool for critical thinking, and it has prerequisites to ensure it’s used appropriately:

- a clear outcome or objective,

- prior story-based customer interviews to understand the customer journey,

- and, ideally, a visible map of the customer journey.

When teams pick up continuous discovery (CD), these tools for thinking critically and guiding the inquiry are the first to go out the door. We’re already introducing a whole new class of activity that takes time, practice, effort, and reflection to improve.

The problem with continuous, cadenced research isn’t that product teams are speaking to users: that is the stopgap solution. The problem is that without intentional practice and reflection, or coaching and guidance, it is difficult for product teams to work their way into a fit-for-purpose mode of inquiry. The habits that underlie healthy continuous discovery collapse; without care, CD is reduced to shorthand for “talking to customers every week,” regardless of the larger context.

There is a parallel here. Agile was once an adaptive means of helping teams advance their work and thinking; now it is, paradoxically, a rigid and dogmatic approach to working, liberally smeared over the full range of our development contexts as sprint cycles and story points. Interpreting the Agile Manifesto and building a healthy way of working that suits our team is hard. Working in two-week sprints and saying we are “agile” is not.

Projects may think too big. Continuous discovery may think too small. How do we help our teams apply the right mode of learning at the right time, and avoid an oversimplified continuous-discoverification of everything?

Purpose Built (Just Right?)

Product teams work in different modes and need to learn at different levels of scale — each has different kinds of research needs. Some of these product needs will be better served by projects, others by a strict continuous cadence, and most, I argue, by a fit-for-purpose middle ground.

Research projects, done properly, set the foundation of a strategy that cannot be acted upon in similarly large increments: they open the door for smaller-sized work.

Continuous discovery, done properly, spawns sub-investigations, spurs on novel ideas and questions, and opens avenues that don’t simply fit into the pipeline of “what to build next”: they open the door for larger-sized work.

Over months and years, a single product team will likely move between the two extremes. Somehow, then, we need to find a balance that’s fit for purpose, as shown in Figure 7.

There is a future where these diagrams don’t exist: there’s no such thing as a ‘research’ activity in red, separate from product activities. To make the right decisions we need to gather the right kind of evidence. We need the right kinds of thinking tools, frames, and models to help us assess and adapt this work to the situation at hand.

As projects unbundle, and interviewing is the first piece that product teams pick up, we need to recognize all the other parts of the process that get swept under the rug. In Figure 8, the aspects labeled in blue are where new practices will emerge.

The higher-order critical thinking loops built into research projects have also come unbundled — but they aren’t as visible and aren’t as easily picked up and acted on.

Once our product teams have their sensing mechanism up and running, they’ll have to approach new questions:

- how do we make sure we’re getting viable and valuable signal for the work at hand?

- how do we build the right kind of to drive effective decisions?

- how do we transform our signal into the right conclusions?

This is the crux of the challenge. What comes next is re-integrating the research function’s invisible responsibility and privilege — critical thinking detached from the manic pace of continuous product development — into the product team’s awareness.

Getting to “Just Right”

Some of our teams are stuck in the “old world,” where researchers are dropped in as pure service providers: take an input, do some work, produce an output. And then they disappear. Projects without a feedback loop for folding new evidence into shared understanding and direction create a need for short-cycle insights and integration.

In the absence of time or support, other teams have latched on to the idea of “all continuous, all the time,” where everything must be answered in cadenced user interviews or small-cycle experiments. If a question can’t be answered that way, it won’t be addressed. Lack of time for depth eventually creates a need for long-cycle insights, and for space that the regular crush of product activities does not afford.

In truth, either starting point is fine. Most important is the product team’s desire to learn, and an openness to reflecting and improving on how things are working. That’s how we find our way to good fit.

The first step is helping our product teams see that they can take charge of their learning: they can design the work to suit their needs, so long as they understand what good looks like.

Some questions deserve high-quality contextual insight, others deserve basic contextual awareness, and still others are reversible, trivial, or harmless enough to explore by building first.

The new question: What tools and systems does the team need to make safe and effective decisions, over and over again, and keep getting better at it?

Researchers, I think, are the best suited to approach this question, by helping product teams navigate, rebuild, and rebundle the tools for healthy decisions throughout their ongoing and upcoming work.

In this world, every mention of democratization is a distraction. The foundational activities of user research are no longer the sole province of user researchers. But, for now, researchers are the ones who understand what good looks like.

For ongoing commentary, essay alerts, and fuzzy sketches about how we make software products, check out Loops and Cycles, a weekly newsletter.

This essay is part of a larger exploration into the ongoing evolution of research. Originally published at https://www.davesresearch.com on June 4, 2024.

Unbundling user research was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.
