TLDR: Achieving AI’s full potential depends on trustworthiness, which requires a focus on transparency, fairness, and security. Tackling challenges such as bias and privacy concerns requires a holistic approach across the entire AI lifecycle. The Z-Inspection method offers a practical framework for assessing and strengthening AI trustworthiness by bridging ethical, technical, and legal considerations. By embracing this comprehensive approach, we pave the way for AI systems that inspire confidence and drive responsible innovation.

What does trustworthy AI mean? Policymakers and AI developers worldwide have poured millions into addressing this question. The driving force behind this effort is the belief that societies can only realize AI’s full potential if trust is built into its development, deployment, and usage. For instance, if physicians and patients do not trust an AI-based system’s diagnoses or treatment recommendations, they are unlikely to follow these recommendations, even if they could improve patient well-being. Likewise, if the general public distrusts autonomous vehicles, these will not replace traditional, manually operated cars.

However, many current AI systems have been found to be vulnerable to imperceptible attacks, biased against underrepresented groups, and lacking in user privacy protection. The consequences range from biased treatment by automated systems in hiring and loan decisions to the loss of human life.

These issues diminish the user experience and erode trust in AI systems as a whole.

Traditionally, AI practitioners, including researchers, developers, and decision-makers, have prioritized system performance (e.g., accuracy) as the primary metric in their workflows. However, performance alone is insufficient to reflect the trustworthiness of AI systems. Improving trustworthiness requires considering factors beyond performance, such as robustness, algorithmic fairness, explainability, and transparency.

Academic research on AI trustworthiness has primarily focused on the algorithmic properties of models. However, advancing algorithmic research alone is not sufficient to create trustworthy AI products. From an industrial perspective, the lifecycle of an AI product spans several stages, each requiring specific efforts to enhance trustworthiness. These stages include data preparation, algorithmic design, development, deployment, operation, monitoring, and governance. For instance, enhancing robustness requires data sanitization, robust algorithm design, anomaly monitoring, and risk auditing. Moreover, a breach of trust in any single stage can compromise the trustworthiness of the entire system.

Therefore, AI trustworthiness must be established and assessed systematically throughout the entire lifecycle of an AI system.

The journey of trustworthy computing

The modern concept of Trustworthy Computing is often traced to a significant event: an email that Bill Gates, the co-founder of Microsoft, sent to all of the company’s employees in 2002. This memo laid the foundation for treating trustworthiness as a core goal of our digital systems.

“…Trustworthy. What I mean by this is that customers will always be able to rely on these systems to be available and to secure their information. Trustworthy Computing is Computing that is as available, reliable and secure…”

The concept of Trustworthy Computing has since been adopted in computer science and systems engineering, for example by standards bodies such as the Institute of Electrical and Electronics Engineers (IEEE) and the International Electrotechnical Commission (IEC).

The International Organization for Standardization (ISO) and IEEE standard definitions build on this concept and on Gates’ system attributes. Their shared vocabulary defines the closely related notion of dependability as:

- the trustworthiness of a computer system such that reliance can be justifiably placed on the service it delivers; and

- of an item, the ability to perform as and when required.

On March 13, 2024, the European Parliament adopted the Artificial Intelligence Act (AI Act), considered the world’s first comprehensive horizontal legal framework for AI. The AI Act establishes EU-wide rules on data quality, transparency, human oversight, and accountability, further advancing the principles of trustworthiness in AI systems.

Practical approaches to AI trustworthiness

However, these definitions and lists of requirements still seem too abstract for practical application. While they provide a foundation for understanding AI trustworthiness, real-world implementation needs a more pragmatic approach.

AI practitioners should assess trustworthiness while building a system, but it is also good practice for people outside the design and development process, such as users, to evaluate it. Their assessment helps surface concerns and build trust in a system they did not help create.

In this context, more practical assessment processes like Z-Inspection come into play. Z-Inspection is tailored to the challenges and requirements of real-world systems development and can be used for co-design, self-assessment, or external auditing of AI systems.

This makes it well suited as a general-purpose methodology for assessing and improving the trustworthiness of AI systems throughout their lifecycle.

- In the design phase, Z-Inspection can provide insights on how to design a trustworthy AI system.

- During development, the process can be used to verify and test that the system is being developed ethically and to assess its acceptance by relevant user groups.

- After deployment, the process can serve as an ongoing monitoring effort to examine the effects of changes in models, data, or environments.

Z-Inspection is a method that thoughtfully combines two established approaches:

- A holistic approach, which seeks to comprehend the entire system without focusing on its individual components, and

- An analytic approach, which carefully examines each component of the problem domain.

This integration aligns with the perspective of the authors of the paper “Responsible AI — Two frameworks for ethical design practice”:

“…evaluating the ethical impact of a technology’s use, [is] not just on its users, but often, also on those indirectly affected, such as their friends and families, communities, society as a whole, and the planet.”

A step-by-step guide to Z-Inspection

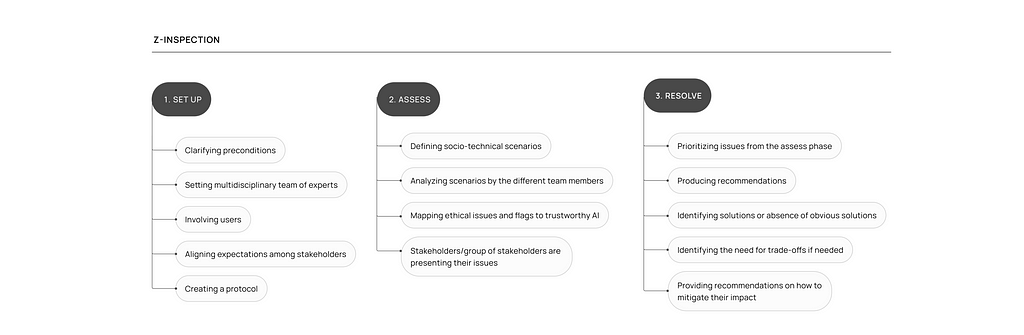

The process consists of three main phases:

- The Set Up phase, when the preconditions are clarified, the assessment team is identified, and the boundaries of the assessment are agreed on

- The Assess phase, when the AI system is analyzed

- The Resolve phase, when the identified issues and tensions are addressed and recommendations are produced.

The Set Up phase

The Set Up phase clarifies the preconditions, assembles the team of investigators, defines the boundaries of the assessment, and creates a protocol.

The Z-Inspection process starts by assembling a multidisciplinary team of experts to address the AI system’s implications. This team should include domain experts, technical specialists, users, legal advisors, and ethicists. The domain expert verifies the AI system’s foundational assumptions, while the technical expert ensures proper implementation. Users voice their concerns about the system, and legal and ethical experts guide the team through potential challenges.

Aligning expectations among stakeholders is crucial, including clarifying who requested the inspection and how the results will be used (e.g., public promotion, system improvements, or communication with end-users).

This phase also involves defining assessment boundaries, recognizing that AI systems operate within a broader socio-technical context. Thoroughly assessing this environment is needed for a comprehensive evaluation.

The Assess phase

The Assess phase is composed of four tasks:

- the analysis of the usage of the AI system;

- the identification of possible ethical, technical, and legal issues;

- the mapping of these issues to trustworthy AI ethical values and requirements;

- the verification of such requirements.

The first step of the Assess phase is to define socio-technical scenarios that describe possible everyday experiences of people using the AI system. These scenarios are used to anticipate and highlight problems that could arise. They are based on information provided by the vendor, the participants’ personal experience, and the assessment boundaries defined in the Set Up phase.

Next, these scenarios are analyzed by the different team members. Each team member is encouraged to contribute their own perspective, as diverse backgrounds can offer complementary viewpoints. For instance, an AI engineer will emphasize different aspects of the system compared to a radiologist, and their focus will differ from that of a nurse.

Each participant or a group of participants with a common background then describes the issues they see with the system from their point of view and provides evidence as to why these issues are actual problems. This evidence can come in various forms, such as established best practices, scientific reports, or conflicts between observed behaviors and vendor system descriptions.

Next, the team maps each issue to the trustworthy AI requirements it conflicts with. This makes explicit the tensions between the system’s behavior and trustworthiness, and shows how each issue affects the trustworthiness of the AI system as a whole.

Afterward, the identified issues are consolidated: each stakeholder or group presents its findings, and related issues are combined into a concise list.
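To make the mapping and consolidation steps more concrete, here is a minimal Python sketch of an issue log. It is a hypothetical illustration, not part of the Z-Inspection method itself: the `Issue` and `ConsolidatedIssue` structures and the `consolidate` function are invented for this example, the target list assumes the seven requirements from the EU High-Level Expert Group’s Ethics Guidelines for Trustworthy AI, and treating issues as “related” whenever they map to the same requirement is a simplification of what is, in practice, a team discussion. The example issues are made up.

```python
from dataclasses import dataclass, field

# Assumption: the seven requirements from the EU High-Level Expert Group's
# Ethics Guidelines for Trustworthy AI serve as the mapping targets.
REQUIREMENTS = {
    "human agency and oversight",
    "technical robustness and safety",
    "privacy and data governance",
    "transparency",
    "diversity, non-discrimination and fairness",
    "societal and environmental well-being",
    "accountability",
}


@dataclass
class Issue:
    """One issue raised by a participant or group (hypothetical structure)."""
    raised_by: str    # perspective, e.g. "radiologist" or "AI engineer"
    description: str  # what the participant sees as a problem
    evidence: str     # best practice, report, or observed behavior
    requirement: str  # trustworthy AI requirement the issue conflicts with

    def __post_init__(self) -> None:
        # Reject mappings to requirements outside the assumed list.
        if self.requirement not in REQUIREMENTS:
            raise ValueError(f"unknown requirement: {self.requirement!r}")


@dataclass
class ConsolidatedIssue:
    """Related issues merged under a single requirement."""
    requirement: str
    descriptions: list[str] = field(default_factory=list)
    raised_by: set[str] = field(default_factory=set)
    evidence: list[str] = field(default_factory=list)


def consolidate(issues: list[Issue]) -> list[ConsolidatedIssue]:
    """Merge issues that map to the same requirement into one entry.

    Simplification: in a real inspection, participants decide together
    which issues are related; here "related" means "same requirement".
    """
    groups: dict[str, ConsolidatedIssue] = {}
    for issue in issues:
        if issue.requirement not in groups:
            groups[issue.requirement] = ConsolidatedIssue(issue.requirement)
        entry = groups[issue.requirement]
        entry.descriptions.append(issue.description)
        entry.raised_by.add(issue.raised_by)
        entry.evidence.append(issue.evidence)
    # Entries raised from more perspectives come first (ties keep input order).
    return sorted(groups.values(), key=lambda c: len(c.raised_by), reverse=True)


# Hypothetical issues from a scenario walk-through of a diagnostic AI system.
issues = [
    Issue("radiologist", "Diagnoses come without a rationale",
          "Vendor documentation omits how outputs are explained", "transparency"),
    Issue("nurse", "Staff cannot tell why a case was flagged",
          "Observed during the scenario walk-through", "transparency"),
    Issue("AI engineer", "Some patient groups are underrepresented in training data",
          "Dataset description supplied by the vendor",
          "diversity, non-discrimination and fairness"),
]

for entry in consolidate(issues):
    print(entry.requirement, sorted(entry.raised_by))
```

Here the two transparency concerns, raised from different perspectives, merge into one entry that is listed first. Ranking by the number of perspectives is only one possible way to order the list before the Resolve phase; a team might just as well rank by severity.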

The Resolve phase

The Resolve phase tackles the ethical, technical, and legal issues identified earlier, addressing ethical tensions where possible. Participants collaborate to discuss and prioritize these issues, seeking feasible resolutions. During this process, conflicts between principles may arise, depending on the system and the specific issues. In such cases, a plan for maintaining the AI system ethically over time may be recommended.

For each tension, one of three cases can occur:

- The tension has no resolution;

- The tension could be overcome by allocating additional resources; or

- There is a solution that does not require choosing between the two conflicting principles.

In some cases, an obvious solution to the conflict may exist. However, other cases might require a trade-off or may have no feasible resolution. At this stage, participants directly address ethical tensions and discuss trade-offs with stakeholders, offering recommendations to mitigate potential impacts.

Final thoughts

I didn’t originally plan to delve into assessing the trustworthiness of AI systems. My initial focus was on how designers can help users overcome skepticism and embrace new technologies. But as I dug deeper, I realized that making sure the system is genuinely trustworthy is far more crucial than just being perceived as such.

The ethical and societal implications of AI systems raise significant concerns. Unfortunately, there is a lack of accessible information for both designers and everyday users on how to evaluate AI trustworthiness.

If you have experience in assessing the trustworthiness of AI systems, I’d love to hear about the methods you’ve used. Sharing your insights can help us all work towards a more transparent, fair, and secure future for AI technology.

The Z-Inspection: a method to evaluate an AI’s trustworthiness was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.
