Intro to B2B design: How to educate users about difficult or complex concepts

Why “it just works” doesn’t work.

“Just Do It” — Nike

Last week, at Apple’s annual launch event, we were treated to what is now a common sight: an array of dazzling, shiny new hardware. The new iPhone Pro has the slickest-looking shell ever to wrap a smartphone. The Apple Watch looks better than ever with its new (definitely-not-leather™️) bands. More exciting for us software developers were the new interfaces, ones not even mentioned at WWDC. For the iPhone, it was the new Action button. For the Apple Watch, it was the new Double Tap gesture, which lets you trigger an action by tapping your index finger and thumb together twice.

It looks shiny.

It looks fun.

It all looks so nice…

…but it’s also useless.

Features without context, without direct application to the problems they solve, are useless. Though they were accompanied by some demonstrations, such as promotional videos on Apple’s website and snazzy snippets during the launch event, their direct application to the problems we face in our daily lives is missing. This means that the goal of personal technology, to make us better problem solvers, remains unfulfilled. That’s because a piece is missing. If we can find that piece, our missing link, and put it back where it belongs, we’ll all be better problem solvers for it.

The Full Chain

Though the mythology of personal computers is a long and misty one, the work of Douglas Engelbart and his colleagues stands out as defining. Engelbart’s 1962 report, Augmenting Human Intellect: A Conceptual Framework, laid the imaginative foundation for what eventually became the personal computer. Its purpose? To aid individuals and groups in “comprehending complex situations, isolating the significant factors, and solving problems.”

The framework Engelbart and his colleagues proposed was made of four pieces: “a trained human being together with [their] artifacts, language, and methodology.” That is, the problem solver (the human) would describe and conceive of a problem using a language. They would then solve that problem by wielding artifacts according to some methodology. It’s a beautifully concise and expressive framework. For more on that, see my article The Unfinished Revolution, or, better yet, check out the original work.

Engelbart places particular emphasis on the training portion, though. He uses the example of an ancient human, flung into the present, being asked to drive a car. “While [he] cannot drive a car through traffic, because he cannot leap the gap between his cultural background and the kind of world that contains cars and traffic, it is possible to move step by step through an organized training program that will enable him to drive effectively and safely.”

Let’s make this concrete. The iPhone is an artifact, nothing more. This year, we were given artifacts whose properties differ only slightly from those of our old ones. The idea that these changes will help us become better problem solvers is left as a vague implication, and the reasons why are swept under a rug labeled “it just works.” Apple’s guiding assumption is this: if our artifacts can be made simple enough and intuitive enough, then we humans can apply all of our existing methodologies, language, and training to our new artifacts to solve our problems. This is exactly the opposite of the conclusion Engelbart and his colleagues reached. There’s no “moving step by step through an organized training program,” and therefore no expansion of our libraries of problem/solution pairs. Frankly, it’s lazy.

It’s not lazy from a product development perspective. I have nothing but the deepest respect for the engineers at Apple, and this kind of optimization is hard work. In his book The Design of Everyday Things, Donald Norman argues for exactly this kind of “training-less” optimization in human-computer interfaces, citing benign examples of door handles and terrifying stories of failures at nuclear power plants. Done poorly, systems that attempt to conform to users’ existing expectations can be difficult to use, frustrating and, in some cases, dangerous.

But where is the optimization in a double tap? Where is the efficiency in an action button? Both appear only when we use these features to streamline existing workflows or create entirely new ones. How do we do that? Which workflows should we optimize? Beats me. And watching a video of somebody buying apples at a Santa Barbara farmers market won’t help answer these questions.

The Misalignment

Why is this the case? Why do we have systems so thoroughly researched and designed to optimize our workflows and make us better problem solvers, when the training needed to use them doesn’t exist? Because the incentives are misaligned.

Apple, like just about every other technology vendor on the planet, is incentivized to design its products according to market considerations. Some of those products are simple; some are complex. Though vendors are ostensibly (and, I think, often genuinely) motivated by a desire to make our lives better, they can only do that if they keep selling new iPhones every year. This requires some kind of “reason why” advertising: an improvement or killer feature that motivates us to buy a new product. That means the event technology vendors optimize for is the purchase. This can take different forms, and it becomes more complex once you consider retention cycles and so on. However, the optimization target is the same.

Conversely, the events we want to be optimizing for are problem instances — individual instantiations of the classes of problems we encounter in our daily lives. Now, technology vendors claim that they are optimizing for this event, as well. After all, won’t we keep buying something if it keeps solving more of our problem instances? Well, maybe. But if this were so — if the two incentives were really aligned — why is there so little training? The fact that such a vital piece of our problem-solving architecture is missing from our relationships with technology vendors is evidence of a misaligned set of incentives. Building systems that “just work” is good for sales and for a narrow set of universally shared, preexisting, shallow problem domains. It’s not a good policy for becoming better problem solvers.

Below the Curve

Some will say that this is simply a problem of learning curves. A large learning curve is imposing. A smaller learning curve is easier to overcome, and thus easier to sell. However, learning curves aren’t the problem.

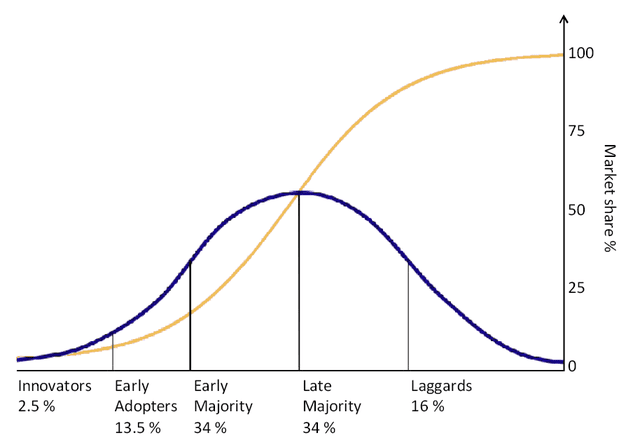

Most tool makers are actually okay with large learning curves; they just rely on psychological factors to overcome them. Many vendors rely on a principle known as the “adoption curve” to anticipate, explain, and encourage the adoption of truly provocative technologies, including ones that don’t simply cater to existing human habits. The theory goes like this: if you introduce a tool that requires a fundamental reevaluation of the way someone interacts with their personal technology, most people aren’t going to get it. Most people won’t want to overcome their existing habits of interaction to engage in such in-depth training. Some people will, though. According to this graph, these innovators make up only about 2.5% of potential adopters. The result (again, in theory) is a cascading reaction, where each willing adopter influences and convinces an adopter just slightly behind them. The adoption curve has been used to explain the introduction of a variety of technologies, with learning curves big and small.

I like this idea. It’s something along the lines of “the tail wags the dog,” and it’s superficially similar to empirical findings in the social sciences. Erica Chenoweth, studying social movements, drew similar conclusions after reviewing data on over one hundred years of societal change. Her number was 3.5%: in her data, movements that won the active participation of about 3.5% of the population tended to succeed.

My favorite recent example of the adoption curve in action is the Arc browser. It launched with a fundamentally different vocabulary and ontology for browsing the web. The most intrusive change was this: tabs don’t belong to windows; they belong to “spaces,” which are independent of windows and persist even after you close a window. This was weird. Initially, even I was put off by it. Opening the same tab in two windows could result in some unintuitive behaviors, such as accidentally closing it in both locations.

When you look at the rationale, though, it’s brilliant. Arc is iteratively creating an “internet computer,” where the tools we need in our daily lives are organized according to their context. Your work tools live in your work space, your personal bookmarks live in your personal space, and so on. What’s more, these contexts are available independently of the device you access them on. The goal is to abstract away the role of individual devices and bring us that much closer to what I like to call Intentful Computation. It’s a beautiful model of computing.

Anyway, Arc ditched this feature back in June. The rationale at the time was that it was causing too much “confusion” for users, which one could read as “it had too high a learning curve.” However, the 2.5% spoke up in its defense, and Arc brought it back on August 25th. As Arc grows, more and more of us will “get used to” the way it behaves, slowly training ourselves to work with a new, empowering tool. If the attention Arc’s competitors are paying it is any indication, we may already be speeding along the adoption curve.

It might be that this slow, organic, and unstructured method of training is the only way to get people on board with new, radically powerful tools. But what if we baked training into the way we interact with technology vendors? What if, instead of throwing out the manual when we receive a new device, we made it the most exciting part of the unboxing?

Breaking the Cycle

What I’m proposing is the technological equivalent of a gym coach. Let’s call this role a computational coach: someone you consult with. Their job is to familiarize themselves with the general principles of common problem domains. They should also be familiar with basic computing principles and with the workings, fruitful applications, and combinations of a wide variety of tools. Their guiding question would be this: what problems do my clients encounter, and what computational processes can I help them build to solve them?

There are already people out there who run their lives with Apple’s Shortcuts and customized combinations of computational tools. There are whole sites dedicated to sharing recipes for them. We tend to call these people “power users,” and there are certainly features that cater to those sorts of folks. Computational coaches would probably be cut from this cloth. However, they would have to apply all of the empathy and psychological tools of the pedagogical disciplines to help their clients. Computational coaches certified in various computational models could then help us integrate new tools when they are released.

Imagine, if you will, unboxing the iPhone 16. You’ve scheduled a call with an official Apple Computational Coach, and you chat while you unbox. They learn that you love traveling and have two cats. You chat some more, and they show you all of the bells and whistles of this year’s model. Because of this personal approach, though, they’re able to help you by demonstrating a workflow that never would have occurred to you: using a combination of new services in the Shortcuts and Home apps to automatically trigger your cat feeder when you’re away from home.

Like any coach worth their salt, though, they would prompt you to grow. Maybe this involves ideation sessions, where the coach and client share ideas for automations and the healthful applications of computational thinking. They would then message you and hold check-in sessions to see how well you’ve practiced these new methods.

There are teams out there, Apple included, creating beautiful tools for thought. Their use, however, often requires the understanding and application of very specific, sometimes esoteric computational paradigms. Facility with these tools requires the same level of granular, targeted practice we would apply to any other complex tool. This training is lacking.

To get the ball rolling and help more of us acquire this training, we need to realign our incentives so that technology vendors are motivated to ensure that the people using their products are using them as intended. Computational coaches are one idea. Regardless of the specific services that emerge to provide training, it’s clear that we need to tweak the narrative. “It just works” isn’t enough, so we should stop promoting it as a one-size-fits-all approach to product development. If we get this right, ordinary computer users will be able to craft the systems that enrich their lives, instead of waiting for some software engineer somewhere to do it for them.

Becoming better problem solvers takes hard work. However, as any coach will tell you, that’s a necessary step toward growth. So let’s do it. Just do it.

The missing link in Apple’s event was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.
