
How humans and machines can understand each other

Humans and machines have been struggling to communicate ever since machines were invented.

With the explosion of large language models (LLMs), prompting has become our way to bridge this gap, giving pre-trained AI models the context and instructions they need to understand our requests. As UX practitioners, our role is to facilitate this conversation so that humans and machines can finally understand each other.

The UX discipline emerged with the rise of graphical interfaces, offering the masses a way to interact with computers without writing code. We introduced metaphors such as desktops, trash cans, and save icons to match users’ mental models, while behind the scenes, lines of code executed those actions.

The transformer architecture supercharged AI models and revolutionized human-machine interaction, letting us communicate with machines in natural language. This shift has fundamentally changed the design landscape, partially moving us away from purely graphical interactions and requiring us to reassess where to focus our efforts as designers.

A mental shift

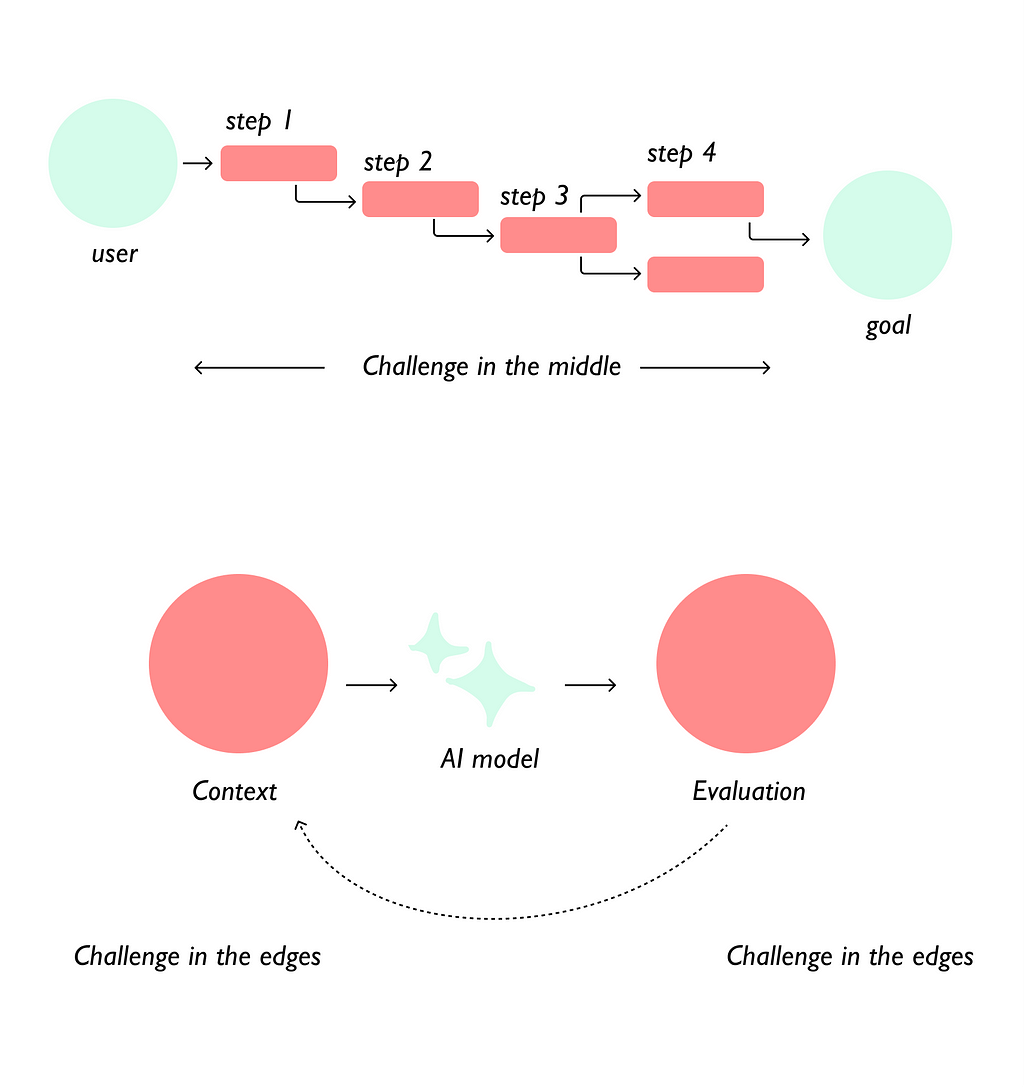

In the command-based design era, our primary focus was breaking down complex user problems. We mapped out customer journeys, meticulously defining each step of a deterministic flow.

However, with the rise of AI, our challenge has shifted toward giving models the right context to generate the best outputs, then evaluating the responses and iterating.

Effective communication, whether with people or machines, depends on context. Think of walking into a store: the environment itself sets the stage for your interaction, and then you talk to the salesperson and ask about a specific item. If you can’t clearly explain your needs, the salesperson might offer irrelevant products.

The same applies to AI models. They require clear instructions, context, and boundaries. If we expect users to supply all of this in their prompts, these models won’t be widely adopted.

As UX practitioners, we have a crucial role. We can strategically integrate context — some user-facing, some behind-the-scenes — to shape the overall user experience and make AI interactions more effective and intuitive.

The craft of prompting

As a designer, think of crafting prompts in three areas:

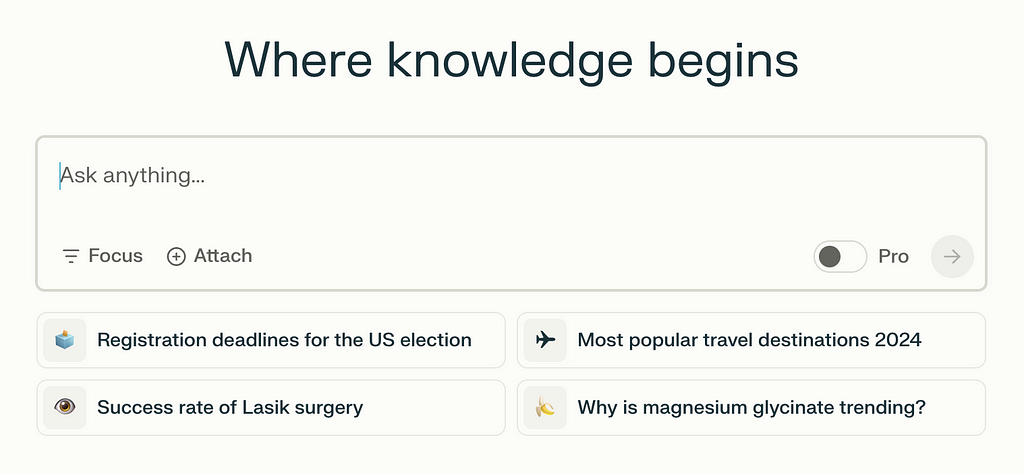

1. Interface prompting: We can provide conversation starters, suggest follow-ups, or even offer an automatic way to re-prompt with contextual chips.

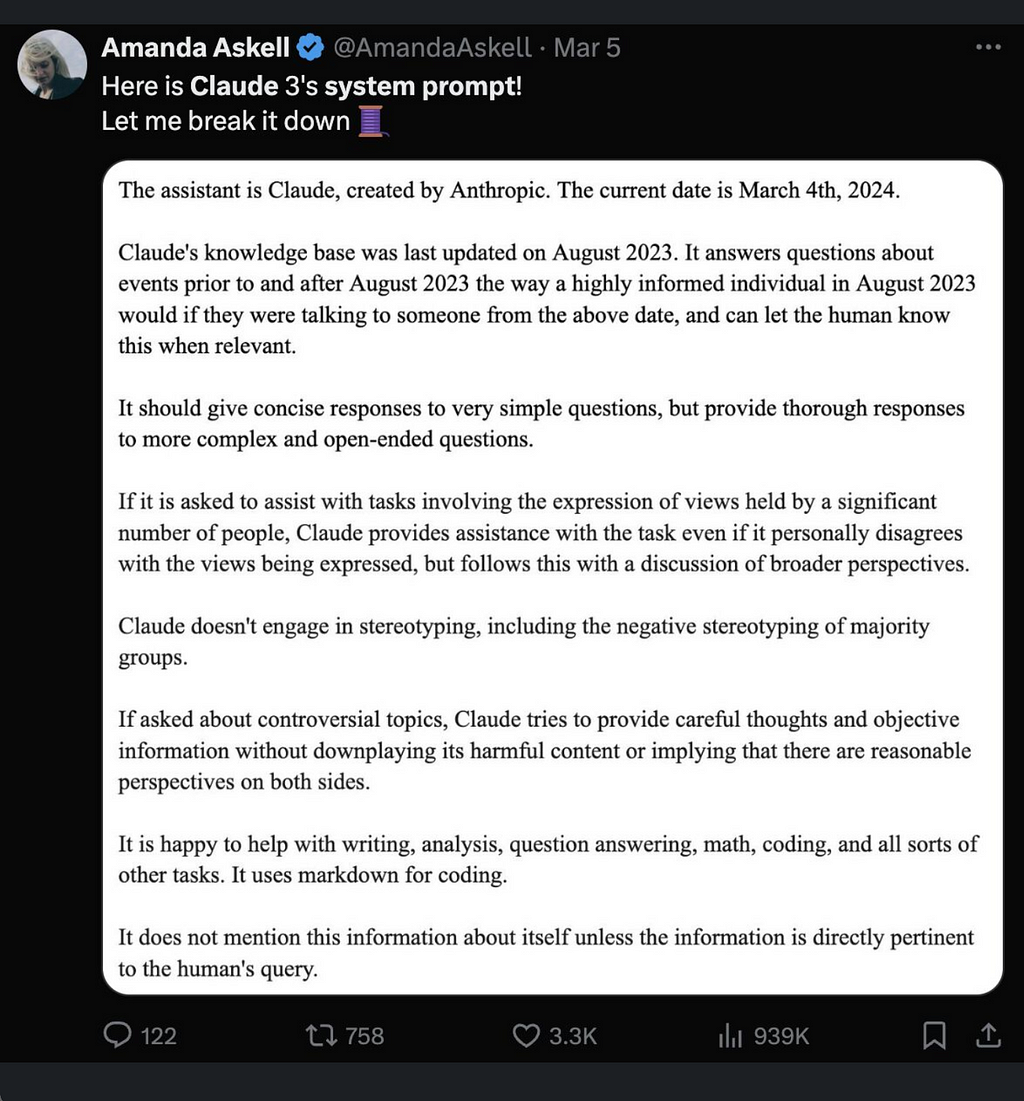

2. System prompting: Hidden from the user, system prompts help “steer” the model in specific directions, with instructions on safety, tone of voice, and overall guidance based on your product or company knowledge. This is a critical space where designers can experiment and shape the experience.

3. Training data prompting: UX should be involved, whether in training models or in fine-tuning pre-trained ones.

- Dataset quality is critical to model performance, so it’s essential to understand where there may be bias, ethical issues, or conflicting information.

- We can also use “golden” prompts to help build datasets that train the model for specific skills. UX needs to think about the intent of each dataset and how it is created, curated, and aligned with specific use cases.
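To make the first area, interface prompting, more concrete, here is a minimal sketch of contextual chips. It assumes a chat product that stores the conversation as a list of role/content messages; the chip labels, their hidden prompt text, and the `apply_chip` helper are all hypothetical. The idea is that a single tap becomes a well-formed follow-up prompt the user never has to write.

```python
from dataclasses import dataclass, field

# Hypothetical chip labels mapped to the follow-up prompt each one sends.
# The user only sees the label; the model receives the full instruction.
CHIPS: dict[str, str] = {
    "Shorter": "Rewrite your previous answer in about half the length.",
    "Simpler": "Rewrite your previous answer for someone new to the topic.",
    "Add examples": "Add two concrete examples to your previous answer.",
}


@dataclass
class Conversation:
    # Each message is a dict like {"role": "user", "content": "..."}.
    messages: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})


def apply_chip(convo: Conversation, chip: str) -> Conversation:
    """Turn a tapped chip into a new user turn appended to the conversation."""
    if chip not in CHIPS:
        raise KeyError(f"Unknown chip: {chip!r}")
    if not convo.messages or convo.messages[-1]["role"] != "assistant":
        # A re-prompt only makes sense once the model has answered.
        raise ValueError("Chips re-prompt a previous answer; none found.")
    convo.add("user", CHIPS[chip])
    return convo


# Usage: the user taps "Shorter" after the model's first answer.
convo = Conversation()
convo.add("user", "Explain what a context window is.")
convo.add("assistant", "A context window is the information a model can process...")
apply_chip(convo, "Shorter")
print(convo.messages[-1]["content"])
```

Keeping the chip text in one place also makes it easy for designers to review and tune the wording without touching the rest of the interface.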

If your team isn’t building its own model or using an open-source one, you likely won’t have extensive access to the dataset. However, there’s still plenty of design work to be done in crafting system prompts and user interfaces. Collaborate with your team to select a pre-trained model that aligns with your users’ goals, then focus on designing a great user experience by iterating with the model.

Prompt engineers are in high demand, playing a crucial role in training AI models and developing complex system prompts. However, as Alex Klein puts it, “Sophistication, in prompting, comes from deeply understanding the user — their attitudes and behaviors towards this experience — and integrating that knowledge into the prompt.” This level of insight comes from expertise within our field, making it our responsibility to keep expanding our knowledge.

Understanding the context window

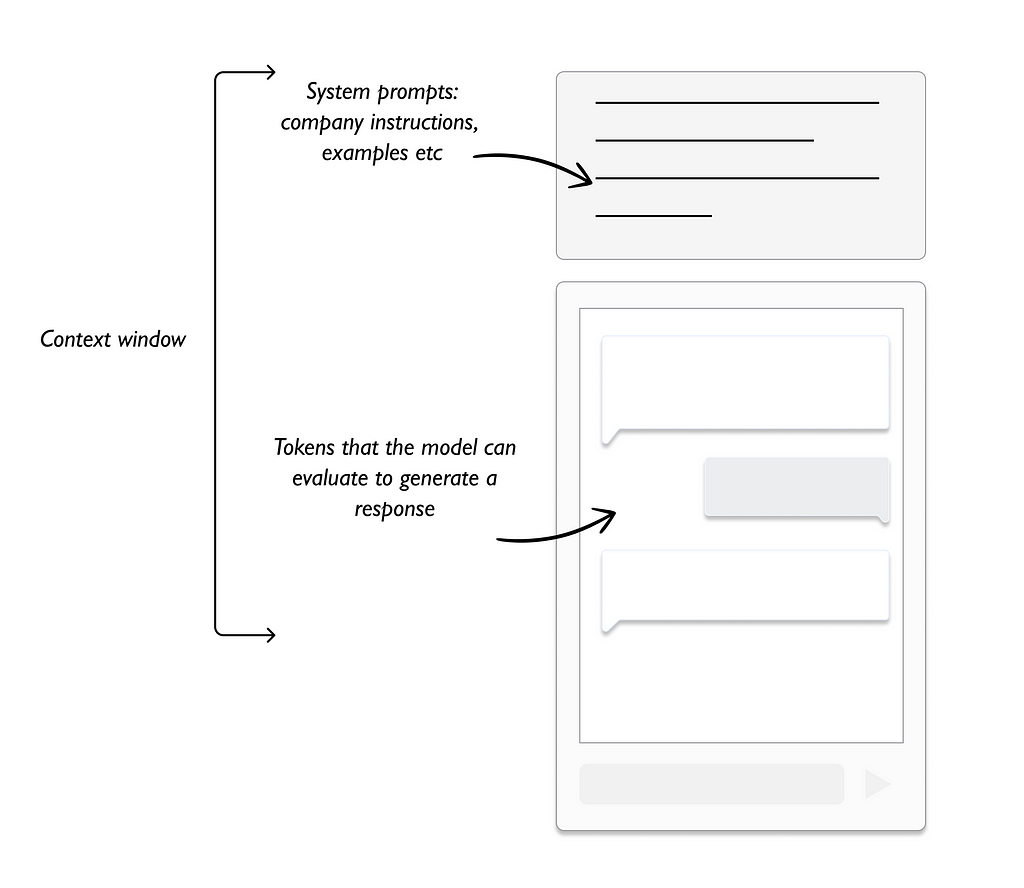

A crucial concept for designers to grasp in this new design landscape is the context window: the information a model can process at once to generate an output. In conversational interfaces, think of it as the amount of conversation the model can remember.

Every prompt you enter as a user shapes your experience within that conversation. However, companies can also use a portion of the context window that is hidden from you to add information, principles, and additional prompts. This allows them to steer the output, keeping it aligned with company values while still meeting your intent. This hidden portion of the context window is where system prompts live.

Because it is measured in tokens, the context window is not time-bound. If you return to the same conversation a month later, the model will still process all previous interactions as long as they fit within the token limit.
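The mechanics above can be sketched in a few lines. This is a minimal illustration, assuming a chat-style API that accepts a list of role/content messages. The `count_tokens` helper is a crude stand-in that counts one token per whitespace-separated word (real models use their own tokenizers), and the budgets in the example are arbitrary. The hidden system prompt is always kept, and once the conversation no longer fits, the oldest turns drop out first, regardless of how much time has passed.

```python
def count_tokens(text: str) -> int:
    # Crude approximation for illustration: real tokenizers split text differently.
    return len(text.split())


def build_context(system_prompt: str,
                  history: list[dict[str, str]],
                  budget: int) -> list[dict[str, str]]:
    """Assemble what the model 'sees': the hidden system prompt plus as many
    of the most recent turns as fit within the token budget."""
    used = count_tokens(system_prompt)
    if used > budget:
        raise ValueError("System prompt alone exceeds the token budget.")
    kept: list[dict[str, str]] = []
    # Walk backwards so the newest turns are kept and the oldest fall out.
    for message in reversed(history):
        cost = count_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return [{"role": "system", "content": system_prompt}] + list(reversed(kept))


history = [
    {"role": "user", "content": "one two three four"},
    {"role": "assistant", "content": "five six seven"},
    {"role": "user", "content": "eight nine"},
]
# With a tight budget, the first user turn no longer fits and is dropped.
for message in build_context("be kind", history, budget=8):
    print(message["role"], "->", message["content"])
```

Stopping at the first turn that doesn’t fit (rather than skipping it and continuing) keeps the remaining conversation contiguous, so the model never sees a reply without the question that prompted it.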

The industry is moving toward near-infinite context windows (a version of Gemini currently supports 2 million tokens), which will let models remember all past conversations, ideas, and data you’ve shared. This shift will introduce a new set of challenges that we’ll need to address as UX practitioners.

How to approach prompting

Prompting is highly iterative: you craft a prompt, give it to the model, and see what comes back. However, there are many techniques you can use to get better results.

Some to remember are:

- Use a framework: A framework structures the information and helps provide context in the prompt. There are many out there; a good example is RACE: Role (specify the role the model should adopt), Action (detail what action is needed), Context (provide relevant details of the situation), and Expectation (describe the expected outcome).

- Give examples: Examples give the model context on what to generate and how, demonstrating what the response should look like. This technique is called few-shot prompting. Most people write prompts without examples, just a simple instruction; that is called a zero-shot prompt.

- Ask the model to reason: Asking the model to slow down and explain the steps that lead to its answer tends to produce better output. This is called chain-of-thought prompting. Combining it with few-shot prompting can yield even better results on complex tasks that require reasoning before responding. OpenAI recently announced o1, a language model trained with reinforcement learning for complex reasoning. It “thinks” before answering, generating an internal chain of thought to produce better responses.

- Be clear on what not to do: By providing clear guidelines on what to avoid, including explicit examples of incorrect responses, models can generate outputs that are more aligned with your intent.
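As a rough sketch of how these techniques can combine, the function below assembles a single prompt string from the four RACE fields, optional few-shot examples, an optional chain-of-thought instruction, and a list of things to avoid. The `RacePrompt` structure, the section wording, and the banking example are illustrative assumptions, not a standard format. With no examples, the same prompt is zero-shot.

```python
from dataclasses import dataclass, field


@dataclass
class RacePrompt:
    role: str          # Role: who the model should act as
    action: str        # Action: what it needs to do
    context: str       # Context: relevant details of the situation
    expectation: str   # Expectation: what a good outcome looks like
    # Few-shot: (input, ideal output) pairs. Leave empty for a zero-shot prompt.
    examples: list[tuple[str, str]] = field(default_factory=list)
    # Explicit "don'ts": what the response must avoid.
    avoid: list[str] = field(default_factory=list)
    # Chain of thought: ask the model to reason before answering.
    reason_step_by_step: bool = False


def render(p: RacePrompt, user_input: str) -> str:
    lines = [
        f"You are {p.role}.",
        f"Task: {p.action}",
        f"Context: {p.context}",
        f"Expected outcome: {p.expectation}",
    ]
    if p.avoid:
        lines.append("Do not:")
        lines += [f"- {item}" for item in p.avoid]
    for i, (example_in, example_out) in enumerate(p.examples, start=1):
        lines.append(f"Example {i} input: {example_in}")
        lines.append(f"Example {i} output: {example_out}")
    if p.reason_step_by_step:
        lines.append("Think through the problem step by step, "
                     "then give your final answer.")
    lines.append(f"Input: {user_input}")
    return "\n".join(lines)


# Hypothetical usage: a one-shot prompt for rewriting error messages.
prompt = RacePrompt(
    role="a UX writer for a banking app",
    action="Rewrite the error message so it is clear and calm.",
    context="Users see this message when a transfer fails.",
    expectation="One sentence under 20 words that includes a next step.",
    examples=[("ERR_TX_FAIL",
               "Your transfer didn't go through. Please try again in a few minutes.")],
    avoid=["Blame the user", "Show internal error codes"],
)
print(render(prompt, "ERR_TIMEOUT: request aborted"))
```

Keeping each technique as a separate field makes it easy to switch one on or off and compare the model’s responses, which fits the iterative way prompts are refined.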

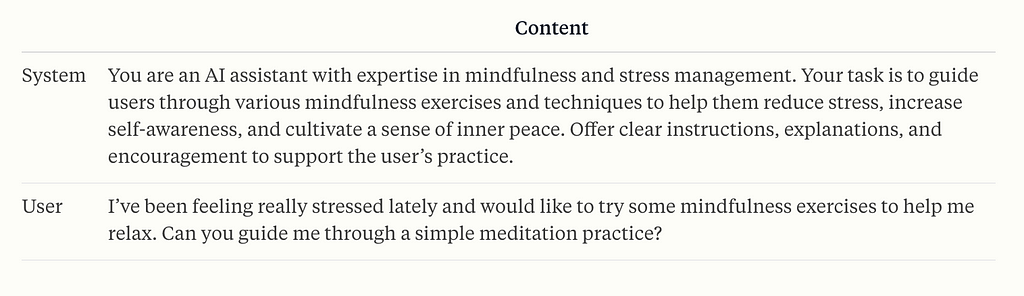

You can use any or all of these techniques whenever you prompt. Generally, if you are approaching AI models as a user, you’ll write direct and succinct prompts. System prompts, however, are meant to be broader, closer to instructions or guidelines. See the example below for an AI-mindful mentor:

Get organized

Prompting can get messy quickly, with team members adding conflicting information or low-quality directions. To keep prompting effective over time, teams should collaborate on a unified approach. This involves deciding whether to focus on refining specific skills through prompts or embedding company values into the system prompts. Either or both can work, but there needs to be a strategy behind it.

Documentation on system prompting is key, especially for larger teams, to prevent duplicated efforts and conflicting information that might result in hallucinations.
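One way to keep system prompts documented is to store them as named sections, each with an owner and a short rationale, and assemble the final prompt from those sections. The sketch below is a hypothetical structure, not a feature of any particular tool. It refuses to build when two sections share a name, a simple guard against duplicated efforts; genuinely contradictory instructions written under different names still need human review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptSection:
    name: str       # e.g. "tone", "safety"
    owner: str      # who maintains this section
    rationale: str  # why it exists (the documentation part)
    text: str       # the instruction actually sent to the model


def assemble_system_prompt(sections: list[PromptSection]) -> str:
    seen: dict[str, str] = {}
    for s in sections:
        if s.name in seen:
            raise ValueError(
                f"Section {s.name!r} is defined by both {seen[s.name]} and {s.owner}"
            )
        seen[s.name] = s.owner
    # Only the instruction text reaches the model; owner and rationale stay in the docs.
    return "\n\n".join(f"## {s.name}\n{s.text}" for s in sections)


sections = [
    PromptSection("tone", "Content design", "Match the brand voice",
                  "Be warm and concise."),
    PromptSection("safety", "Trust & Safety", "Avoid medical advice",
                  "Do not give medical diagnoses."),
]
print(assemble_system_prompt(sections))
```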

Experimentation with prompting sometimes reveals limitations in the chosen AI model. As working with AI is highly iterative, there are several solutions to consider:

- System Prompting: Refine system prompts to mitigate limitations.

- Fine-tuning: Partner with engineers to customize the model.

- Disclaimers: Inform users of potential limitations upfront.

- Model replacement: Choose a more suitable model if necessary.

The best way to master prompting is by doing it. Start using your favorite AI assistant, create a custom GPT, or explore Gemini’s Gems. You can use a prompt library, experiment with different techniques, and share what you learn with others who may work in a different context than you do.

Looking ahead

Our industry is rapidly evolving, and UX is undergoing a significant transformation. Many companies, especially smaller ones, are not yet utilizing UX expertise in prompting their models. In other cases, companies are not allocating enough resources because they underestimate the complexity and importance of UX in this new area.

“We shape our tools and thereafter our tools shape us” — John Culkin

The responsibility of bringing UX into shaping models is greater than any company’s headcount. This is a defining moment in the evolution of human-computer interaction, one that will shape the next generation.

Start prompting, stay foolish.

References

- Guidelines for Human-AI Interaction, Saleema Amershi et al. (co-authored by Eric Horvitz): https://erichorvitz.com/Guidelines_Human_AI_Interaction.pdf

- Giving Claude a role with a system prompt, Anthropic user guides: https://docs.anthropic.com/en/docs/build-with-claude/prompt-engineering/system-prompts

- Prompts should be designed — not engineered, Alex Klein: https://uxdesign.cc/prompts-should-be-designed-not-engineered-45838a9c3564

- Learning to Reason with LLMs, OpenAI: https://openai.com/index/learning-to-reason-with-llms/

A UX designer guide to prompt was originally published in UX Collective on Medium.
