Usability is about human psychology, not technology.

For a long time, digital user experience (UX) has been focused on flat, rectangular, physical screens. Websites and mobile apps have reigned supreme when it comes to designing interfaces and interaction experiences.

But the world is changing. Quickly. At the time of writing, we’re seeing the:

- revolution in artificial intelligence (AI), from OpenAI and Google interfaces capable of generating text, images, music and video, to hype around products like the Rabbit r1 and the Ai Pin by Humane — perhaps early glimpses of future AI assistants.

- growth of the augmented/virtual reality (AR/VR) market, from industry-leading spatial computing headsets like the Meta Quest and Apple Vision Pro to smart/AR glasses emerging as the next big thing in wearable tech.

- development of brain-computer interface (BCI) research and potential applications, including (ideally non-invasive) use cases for medicine, marketing, education and games.

If you’re a ‘traditional’ UX/product designer, it might seem like the relentless pace of emerging technology is leaving you behind. You might have even wondered:

How can old-school design principles be relevant in a world of futuristic tech?

But here’s the thing: while new technologies require learning new technical skills, the knowledge that actually makes you a good designer — such as understanding design principles — is universal. It’s future-proof.

In fact, the principles of design might actually be more relevant to emerging technology products.

So it’s probably a good point to start talking about them.

The principles of design

The seven fundamental principles of design were introduced to the world by Don Norman in his book The Design of Everyday Things, which has become essential reading for UX designers.

In this seminal work, Norman argued that products are usable if they follow these seven principles: good discoverability underpinned by affordances, signifiers, feedback, mappings and constraints, within the context of a conceptual model.

Here’s what each of those principles is about, in a nutshell:

1. Discoverability

Discoverability describes how easy it is to understand what interactions are possible with a product.

Usability should be intuitive. For example, when you load up a website it should be obvious what it’s for, who it’s aimed at, how to use it, etc.

Good discoverability is about making it obvious what users can do with a product without additional context. The easiest way to design for this? Follow established patterns and conventions so the product feels familiar to users (Jakob’s Law).

But discoverability isn’t actually a thing in itself, so much as the cumulative effect of those five supporting principles: affordances, signifiers, feedback, mappings and constraints.

2. Affordances

Affordances are the actions that are possible within the system or product.

The concept of affordance describes the relationship between the properties of a product and the capabilities of the user.

That’s a very technical, nerdy way of thinking about design principles, though. In simple terms, we can think of affordances as what you can do with a product — how to operate and interact with it.

For example, a mobile app might afford actions like scrolling, swiping, tapping, typing, searching and navigating. The app has properties that make these actions possible, and users have the capability to perform them.

3. Signifiers

Signifiers are the perceivable signals that indicate to users what actions are possible, and how they should be done.

For UX designers, signifiers are more important than affordances because they tell the user how to use the design. All the functionality in the world is worthless if people don’t know how to use it.

Some classic examples of signifiers you see all the time in typical user interface (UI) design include:

- arrows signifying users can scroll, swipe or drag to refresh

- buttons indicating that pressing them will perform an action

- icons suggesting the type of action to be performed in that area of the screen, e.g. a magnifying glass for search

4. Feedback

Feedback alerts users to the result of their actions and the current state of the product.

If signifiers tell users how to use a design, then feedback tells them what’s happening when they interact with it. There are two concepts in play here, so let’s look at them individually:

- Result of user actions: We need to know the design is responding to our clicks and taps. For example, links changing colour when we use them — this feedback tells us the product or system is registering our interactions, and reassures us the design is working as expected.

- State of the product: We need to know when the overall state of the product or system has changed. For example, an ‘order received’ message during an online purchase reassures us that the status of the transaction has changed from in-progress to complete.

It’s important that feedback is immediate, but planned appropriately so important information is prioritised. (If you tell users too much at once they’ll get overwhelmed and probably ignore everything.)
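As a rough illustration of those two kinds of feedback, here is a minimal TypeScript sketch. Every name in it (`OrderStatus`, `feedbackMessage`, `placeOrder`) and all the message wording are assumptions made up for this example, not part of any real checkout or library. It acknowledges the user’s action straight away, then reports the new state once it is known, showing one prioritised message at a time.

```typescript
// Hypothetical order states, invented for this sketch.
type OrderStatus = "in-progress" | "complete" | "failed";

// Maps each product state to the single message the user most needs to see.
function feedbackMessage(status: OrderStatus): string {
  switch (status) {
    case "in-progress":
      return "Placing your order...";
    case "complete":
      return "Order received";
    case "failed":
      return "We could not place your order. Please try again.";
  }
}

// Acknowledges the action immediately, then reports the state change.
// `submit` and `show` are stand-ins for a real payment call and a real UI.
function placeOrder(
  submit: () => boolean,
  show: (message: string) => void
): OrderStatus {
  show(feedbackMessage("in-progress")); // result of the user action
  const status: OrderStatus = submit() ? "complete" : "failed";
  show(feedbackMessage(status)); // new state of the product
  return status;
}

// Usage: collect the messages a user would have seen, in order.
const shown: string[] = [];
const finalStatus = placeOrder(
  () => true,
  (message) => {
    shown.push(message);
  }
);
```

In this sketch the user would see “Placing your order...” followed by “Order received”, and nothing else competing for attention.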

5. Mappings

Natural mappings help users to better understand the relationship between controls and the result of their actions.

‘Mapping’ means using spatial correspondence between the layout of controls and the product being controlled.

A classic example of mappings in product design is the layout of a cooker hob corresponding to the layout of the hob controls, or the arrangement of light switches matching the actual placement of lights in the room.

In UX design, an example of natural mappings that spans different devices is scrolling: as a user you can swipe up or down using a laptop trackpad to scroll up or down a webpage; similarly, you roll a mouse wheel up or down to perform the same action.

6. Constraints

Constraints help prevent slips and mistakes by removing opportunities for errors.

The concept of constraints is initially counter-intuitive. But it turns out limiting user interactions is actually helpful rather than just annoyingly restrictive.

It’s also easy to overlook adding constraints to a design, because you design products to be used correctly. But the reality is users get tired, distracted and easily make mistakes. Or they might confidently use a product incorrectly. In either case, we can minimise errors by adding constraints.

It’s beyond the scope of this article to explain the different theoretical types of constraints (logical, semantic, cultural and physical). But generic examples include asking for confirmation before users clear or cancel something (avoiding a mistake caused by an accidental slip or mis-click) or restricting logins to trusted third parties for security reasons.
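The confirmation example can be sketched in a few lines of TypeScript. This is only an illustration under stated assumptions: `Basket`, `AskUser` and `clearBasket` are hypothetical names invented here, and the `askUser` callback stands in for whatever confirmation dialog a real product would use.

```typescript
// Hypothetical types for this sketch only.
interface Basket {
  items: string[];
}

// Stand-in for a confirmation dialog: returns true if the user agrees.
type AskUser = (message: string) => boolean;

// Clears the basket only after the user confirms; otherwise returns it unchanged.
function clearBasket(basket: Basket, askUser: AskUser): Basket {
  if (basket.items.length === 0) {
    return basket; // nothing to lose, so no need to interrupt the user
  }
  const confirmed = askUser(
    `Remove all ${basket.items.length} items from your basket?`
  );
  return confirmed ? { items: [] } : basket;
}

// Usage: an accidental tap that the user cancels, then a deliberate clear.
const basket: Basket = { items: ["kettle", "mug"] };
const afterSlip = clearBasket(basket, () => false);
const afterIntent = clearBasket(basket, () => true);
```

The constraint costs a deliberate user one extra step, but it turns an accidental tap into a recoverable moment rather than lost work.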

7. Conceptual model

A conceptual model is a simplified explanation of how something works. It doesn’t matter if this approximation is accurate, as long as it’s useful.

The final piece in the design principles puzzle is the conceptual model. The idea is that users need some relatable model of how a product or system works to be fully confident and comfortable using it.

Take the modern smartphone: it’s not necessary for users to understand how it works on the level of an engineer or software developer. All they need to know is that it’s a phone, which also acts as a camera, calculator, notepad and hundreds of other things. The conceptual model is simply that it’s a helpful, entertaining multi-functional device.

Approximate conceptual models of products are useful in the same way as simplified mental arithmetic. Working out 10 x 40 instead of 9 x 42 gives you 400 rather than 378: not the exact answer, but for practical, everyday situations it’s typically close enough to be useful.

Why these principles work — and why they always will (probably)

Don Norman’s fundamental principles are about users, not products.

Users are human, and humans don’t change much from generation to generation. The speed of technological revolution is far outpacing human evolution. In fact, our brains are not much different to those of our ancestors who existed tens of thousands of years before we even settled down to start farming and founding the first civilisations.

What makes products usable is how well they’re designed to meet our human need for understanding, usefulness and control. Every emerging technology is simply a new challenge to design for these psychological requirements.

This is what usability is all about. It’s what it’s always been about, and will be far into the future — as long as humans are still recognisably human.

It’s why Norman’s design principles are relevant whether we’re talking about the earliest Iron Age tools, modern smartphones, interacting with AI companions as Joaquin Phoenix’s character does in the film Her, or living in an OASIS-style metaverse like the one in Ready Player One.


In fact, there’s a parallel here with all fundamental UX theory, such as Jakob Nielsen’s ten usability heuristics for interface design. Originally published in 1994, these ‘rule of thumb’ guidelines are still relevant — for the exact same reason as Norman’s design principles: they’re based on psychology, not technology.

What I think all of this means is:

There’ll still be a role for UX designers long after Figma dies and the last iPhone has been discarded on the e-waste mountain of doom.

If you do a search for ‘UX is dead’, you’ll find plenty of results. But despite frequent obituaries proclaiming the death of UX, we’re actually just existing in a single moment of the user experience design story. The tools might change, the products might change, but the users don’t. We don’t. And so the principles that define good UX design will always be relevant.

Applying design principles to emerging tech

Whether the future really is AI assistants pinned to your chest, living life through extended reality (XR) headsets (or glasses, or contact lenses), or perhaps every table, wall and surface doubling as a user interface, UX design principles apply:

- Discoverability: Users will still need to understand what interactions are possible. This is especially important for emerging tech — how can innovative new products be successful if people don’t know how to use them? Conventions will emerge, and designers should follow them.

- Affordances: Users will still need actions that are possible within the system or product. Except that in future these will extend beyond mouse clicking and screen tapping to eye focus, body movements, facial expressions, hand controls and voice input.

- Signifiers: Users will still need to be told how to use the design. But in some cases this might move from visual signifiers to audible alternatives. For example, AI assistants explaining how to use the product or system — prompting and suggesting actions.

- Feedback: Users will still need to be alerted to the result of their actions and the current state of the product. This could include visual feedback from familiar UI screens replicated in virtual space, haptic sensory feedback like vibrations through wearable tech, or audible feedback like voice, music or sound effects from our portable AI assistants.

- Mappings: Users will still need help to better understand the relationship between controls and the result of their actions. An obvious example of this is mapping hand-held controllers to a VR environment — users can see digital silhouettes of their controllers (and hands) in VR that correspond to their external, real-world orientation.

- Constraints: Users will still need help avoiding slips and mistakes, which means removing opportunities for errors. This is a huge challenge for XR designers, as they have to handle all the virtual environment considerations as well as keeping people safe in the real world. For example, safe zone boundaries help users avoid bumping into objects (or people).

- Conceptual model: Users will still need simplified approximations of how things work. For example, thinking of AI assistants as fast, intelligent but slightly naïve human helpers who lack real-world experience. Remember: it doesn’t matter if this is accurate, as long as it’s useful.

Are there any future scenarios where design principles might not be relevant?

Possibly. It depends how you look at it.

The potential issue with brain-computer interface (BCI) technology, for example Elon Musk’s Neuralink, is that it has the ability to remove any perceptible interface: you’re not necessarily thinking first, then performing an action through a UI — your thoughts and actions theoretically become indistinguishable.

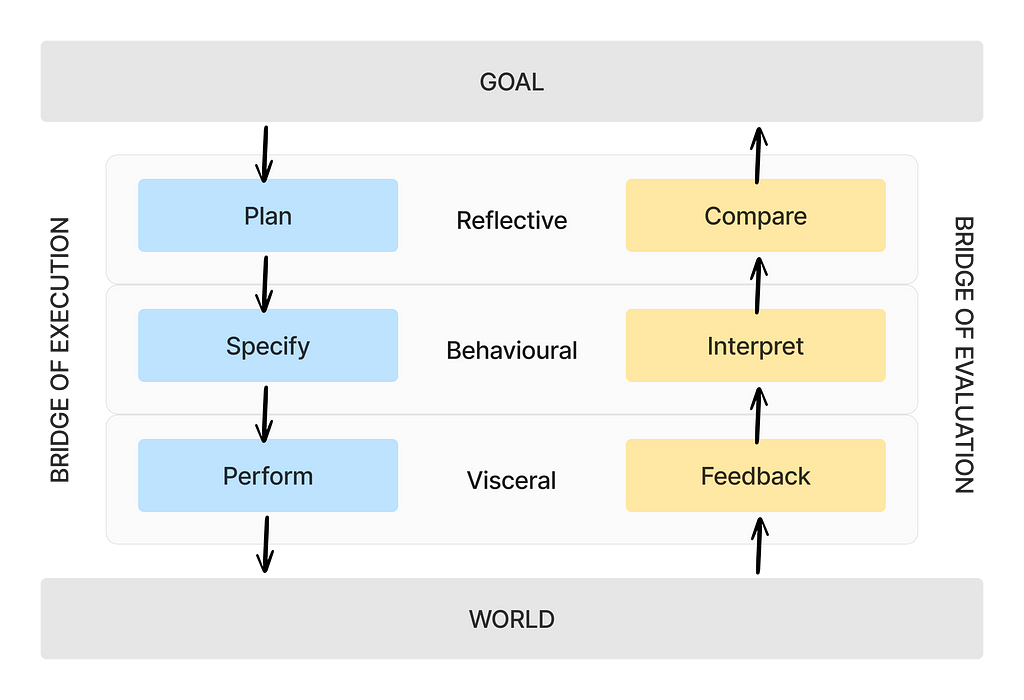

Effectively, BCIs have the potential to bypass the entire left-hand side of Don Norman’s Seven Stages of Action Cycle. This cycle provides a model for how we consciously plan then act (the ‘Bridge of Execution’) and interpret the result of our actions (the ‘Bridge of Evaluation’) in the pursuit of a goal, from starting a car to installing some software.

With BCIs, you could just ‘think’ your goal, then evaluate if it’s been met. This potential shortcut is great for disabled people using BCIs to live more independently, but slightly more problematic if you’re using your brain signals to drive vehicles, operate machinery or fire weapons.

The problem with some BCI scenarios relating to design principles is that you’re not using the technology so much as you’re integrated with it. Does the concept of ‘product’ and ‘user’ break down slightly at this point? Or is it just a boss-level challenge for ensuring design principles are followed? (If it’s the latter, constraints may become the priority design principle — ensuring ‘checkpoints’ between thought and action for safety and ethical reasons.)

I think the concept of intention is key when deciding if the principles of design are relevant to futuristic technology. Humans need to be actively and intentionally using products for design principles to apply. We need to be in control.

If we’re simply surrounded by an “amorphous computational substance permeating the environment” that autonomously makes decisions for us, the product/user model once again comes under threat. (Hat-tip to human-computer interaction (HCI) legend Alan Dix for that wonderful description.)

While it might be helpful for our homes to be filled with sensors and AI that respond to our speech, behaviour and even mood, are we truly users in that scenario? Or are we beneficiaries of technology acting independently on our behalf — circumventing design principles?

As you can see, the further into the realm of science fiction we look, the more vague and existential it gets.

So let’s wrap up.

Summary

- Emerging technologies such as AI and XR are reshaping our world.

- But Don Norman’s fundamental principles of design are still relevant, because usability is about humans, not technology — and we don’t change very quickly even though technology does.

- Products and systems will continue to be usable if they have good discoverability underpinned by affordances, signifiers, feedback, mappings and constraints, within the context of a conceptual model.

- While the roles, tools and skillsets of UX designers will undoubtedly change, there’ll always be a need for UX design — because we’ll always need to ensure new products and experiences follow design principles (which are about people, not the products themselves).

- There are some conceivable scenarios that stretch design principles to breaking point — but most of them are still fairly futuristic and science-fiction-y (for now).

Sources

Dix, A. (2017) Human–computer interaction, foundations and new paradigms, Journal of Visual Languages & Computing, 42, pp. 122–134. Available at: https://doi.org/10.1016/j.jvlc.2016.04.001.

Kumari, A., and Edla, D.R. (2023) A study on brain–computer interface: methods and applications, SN Computer Science, 4. Available at: https://doi.org/10.1007/s42979-022-01515-0.

Norman, D. (2013) The design of everyday things: revised and expanded edition. New York: Basic Books.

Statista (2023) AR & VR — worldwide. Available at: https://www.statista.com/outlook/amo/ar-vr/worldwide (Accessed 5 March 2024).

Statista (2023) Generative AI — worldwide. Available at: https://www.statista.com/outlook/tmo/artificial-intelligence/generative-ai/worldwide (Accessed 5 March 2024).

About the author

Andrew Tipp is a lead content designer and digital UX professional. He works in local government for Suffolk County Council, where he manages a content design team. You can follow him on Medium and connect on LinkedIn.

Why UX design principles will forever be relevant was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.
