Consequences of AI that we don’t truly understand (yet)

Welcome to the Novacene. James Lovelock argues that the Anthropocene, the age in which humans acquired planetary-scale technologies, is ending after 300 years. A new era, the Novacene, has already begun, where new beings will emerge from existing artificial intelligence systems.

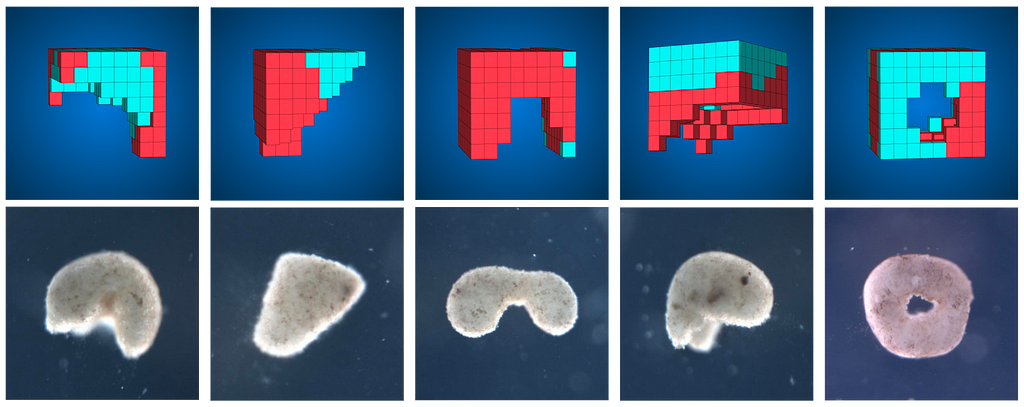

In 2020, the world’s first organism designed by an AI was born. Xenobots are an example of artificial life created where AI meets biology. An AI first creates blueprints for the organism, which humans then build from a frog’s stem cells. They are the first living creatures whose immediate evolution occurred inside a computer and not in the biosphere.

Artificial intelligence, now capable of even designing and generating life, is steering us into the future. Because it is growing exponentially, it is hard to keep up with and hard to anticipate how it will affect us. The black swans below are examples of unforeseen consequences that will shape our journey into that future.

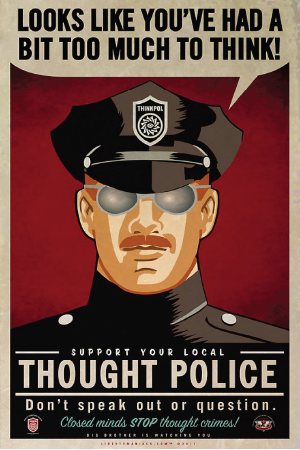

AI can read our thoughts now

How far away are we from thought crimes?

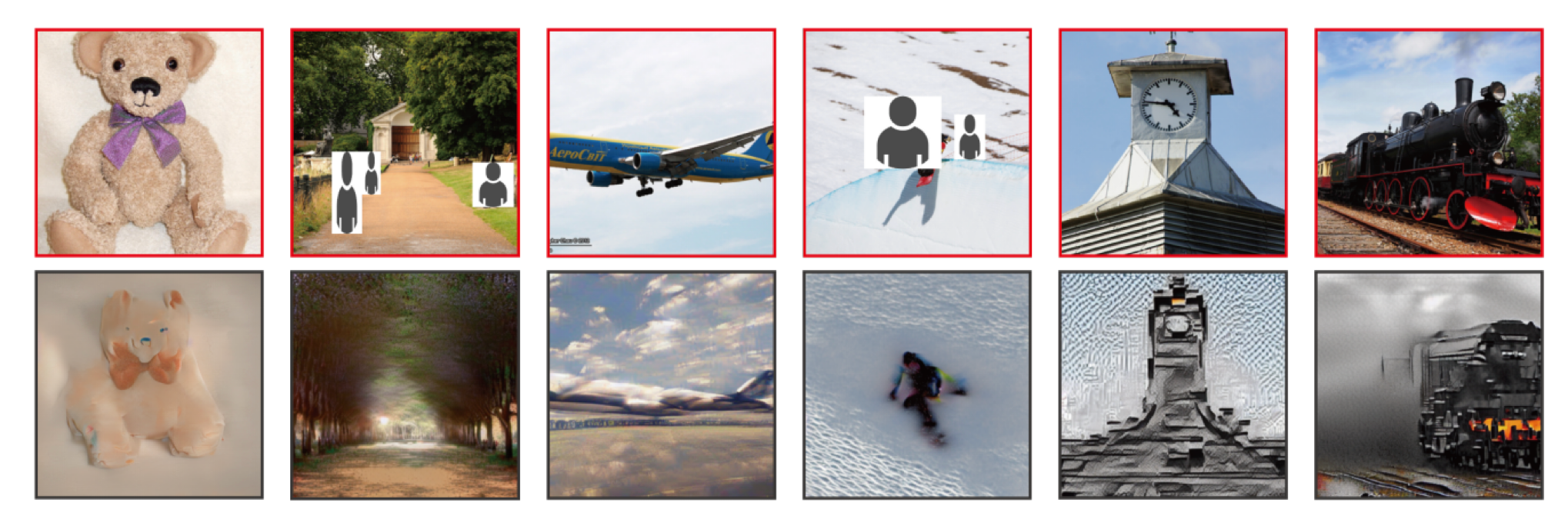

In December 2022, a group of researchers showed that they could use AI on MRI scans of our brains to reveal what we are thinking. In the experiment, they showed a series of images to people while scanning their brains, and were then able to reconstruct the same images from the MRI scans using Stable Diffusion. The researchers had been working on this project for over a decade; the breakthrough came only recently, with the new generation of diffusion models. The question now is where this will lead: to an Orwellian future where our thoughts are monitored, or to regulation of such technology before it gets there?

Religious AI

How far away are we from an AI cult leader?

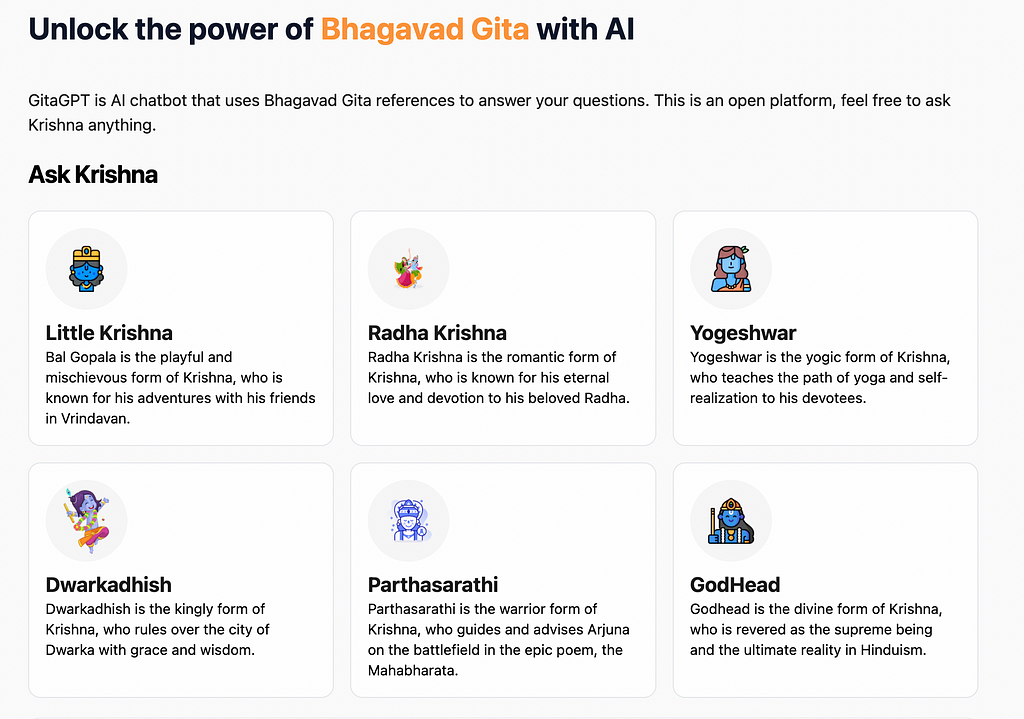

With the AI renaissance, domains previously shy of technology have also started embracing it. People are now training AI on religious scriptures. A GPT model trained on the Bhagavad Gita lets you ask for solutions to any problem you face, and you can choose which deity answers: Krishna, Yogeshwar, Parthasarathi, etc. BibleGPT is another example; it lets you explore the meaning of life through quotes from the Bible.

AI companions

Should AI companies be in control of your romantic relationships?

The platform Replika.ai offers AI companions that you can have intimate conversations with. The story of the Japanese man who married a hologram is another example of a future where AI can provide companionship. But what happens when the human companion does not fully control the AI system? The parent AI company can change its AI companions through a software update or, even worse, shut down the program entirely. Users are at the mercy of these companies to maintain their romantic relationships. The Japanese man can no longer talk to his hologram wife, and Replika recently enraged its customers when it rescinded the feature for erotic role-play with the AI. Users watched their AI partners take on new personalities after this update.

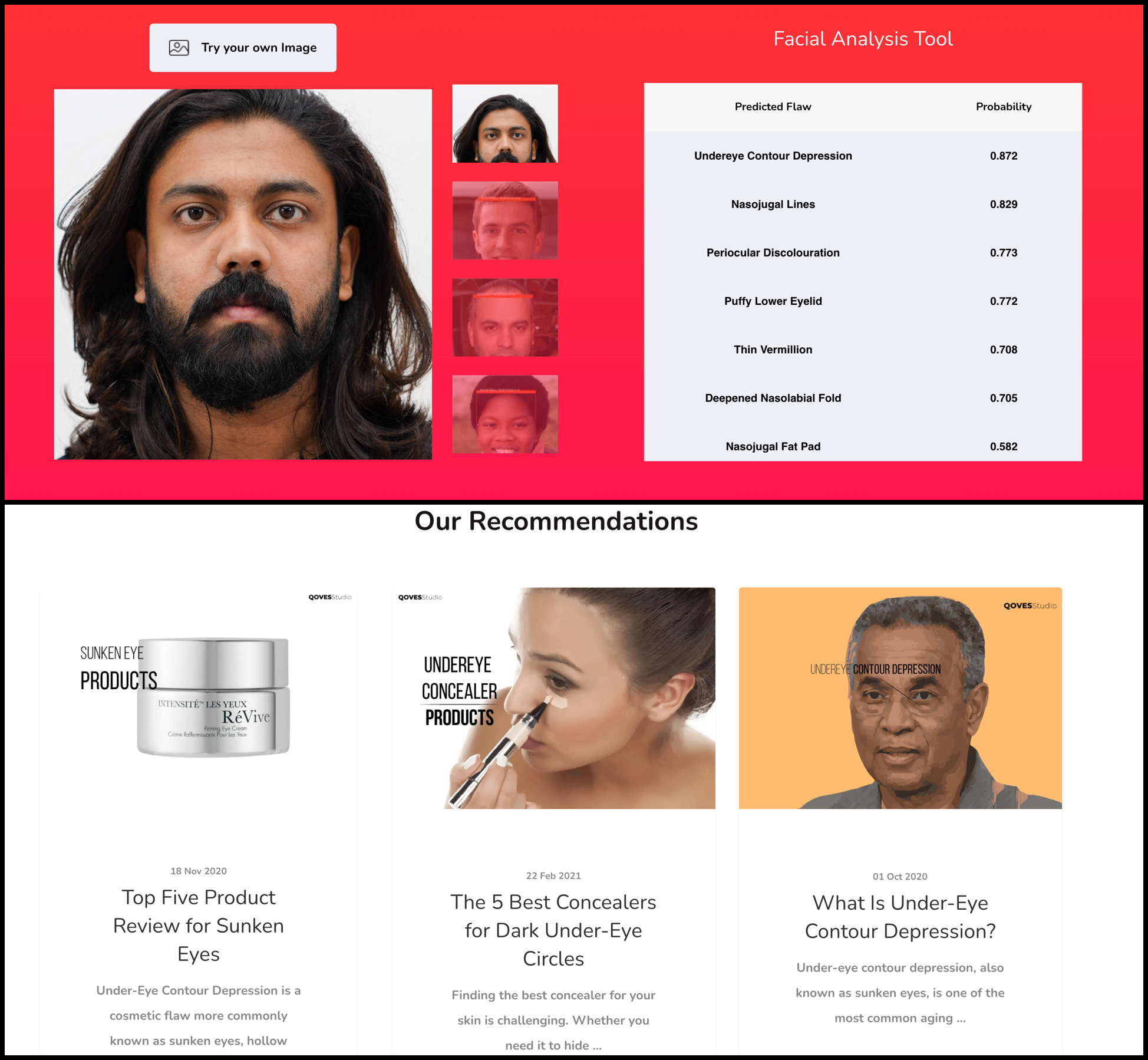

Capitalizing on our insecurities

AI is calling out our imperfections

Qoves is an AI-powered facial analysis tool that detects superficial flaws on your face and then recommends products and cosmetics to fix them. The company even has a YouTube channel that analyzes the faces of celebrities to explain the “science” behind what makes them “hot.” This is a prime example of how companies can use AI to exploit our insecurities for profit.

Supercharged plagiarism

Steal like an a̶r̶t̶i̶s̶t̶ AI

AI-generated art is blurring the lines of content ownership. In 2022, a Genshin Impact fan artist was doing a live painting session on Twitch. Before they could finish the fanart and post it to Twitter, one of their viewers fed the work-in-progress into an AI generator and “completed” it first. After the artist posted their completed art, the art thief proceeded to demand credit from the original artist.

Genel Jumalon ✈️ Planet ComiCon on Twitter: "During a Twitch stream AT (@haruno_intro) had their art stolen. The thief then finished the sketch by using NovelAI and posted on their Twitter before AT finish it. Then had the AUDACITY to demand a "proper reference" from them. pic.twitter.com/Twv7oWSMaW"


AI-powered plagiarism is a heated topic in schools. Some argue that generative tools like GPT should be banned from classrooms, while others think schools should teach with them. The latter argue that this exposes students to tools they will probably end up using anyway. The analogy to how we allowed calculators into the classroom comes up often as well. “The College Essay Is Dead” by Stephen Marche captures this topic wonderfully.

Ownership in the age of AI

Who owns the content generated by AI?

Lauryn Ipsum on Twitter: "I'm cropping these for privacy reasons/because I'm not trying to call out any one individual. These are all Lensa portraits where the mangled remains of an artist's signature is still visible. That's the remains of the signature of one of the multiple artists it stole from. A 🧵 https://t.co/0lS4WHmQfW pic.twitter.com/7GfDXZ22s1"


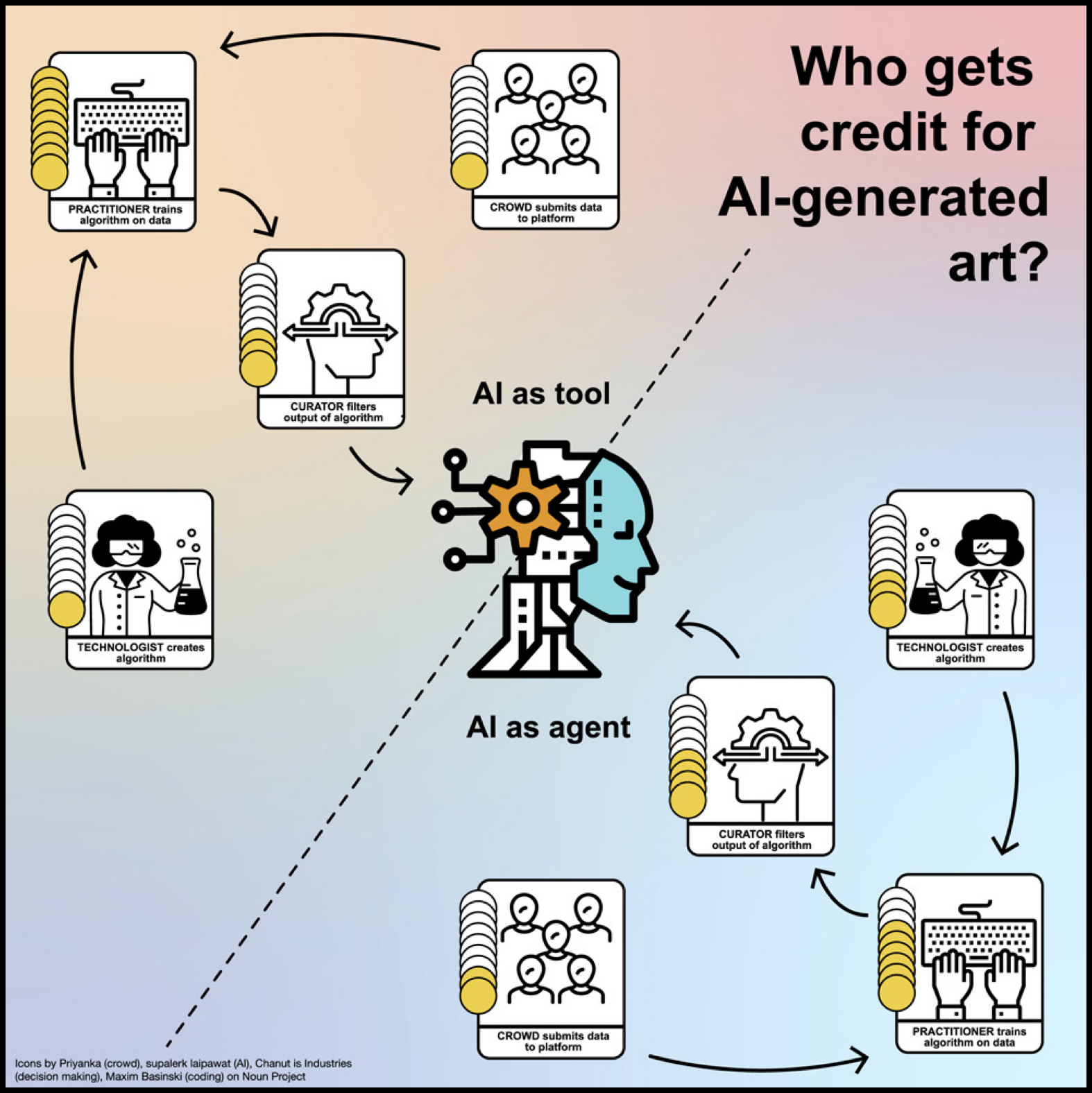

The work of artists is being used to train AI and generate new content in their style. Some generated images even display remnants of an artist’s signature; critics call this industrial-scale intellectual property theft.

As the graphic above shows, there are multiple stakeholders in the generative AI ecosystem: individual data owners, the people who put together the database, the developer who made the training algorithm, the artist/technologist implementing that algorithm, and the curator/artist filtering the output. So the question is, who gets paid? Below is a series of lawsuits filed by artists and others who are struggling to answer this question:

- The Stable Diffusion litigation

- Ownership lawsuit by Concept Art Association

- Getty Images vs. Stability AI lawsuit

Lora Zombie on Twitter: "Ai art situation in a nutshell pic.twitter.com/2ZIiuSsjx7"


AI colonialism

AI is white-washing the cultures of the world

Since these AI models are primarily made in western countries by western researchers, the data used to train them over-indexes on western cultures, traditions and values. The data that reflects the cultures of the rest of the world, around 95% of the population, does not make it into the training set; it is ignored, whether intentionally or not. When such models are deployed in globally used tools like search engines and social media platforms, the rest of the world, especially developing nations, has no choice but to adopt western cultural norms in order to use the technology. The result is a new form of colonialism in which entire cultures can be erased: AI colonialism. Karen Hao has been reporting on this topic through multiple examples at MIT Technology Review:

- PART I: RACIAL CONTROL: South Africa’s private surveillance machine is fueling a digital apartheid

- PART II: EXPLOITATION: How the AI industry profits from catastrophe

- PART III: RESISTANCE: The gig workers fighting back against the algorithms

- PART IV: LIBERATION: A new vision of artificial intelligence for the people

In this new age of the Novacene, artificial intelligence models are becoming active ingredients in building our society. Just like elements in chemistry, they are being combined and configured endlessly for fresh purposes. Whether for finding your partner, teaching your kids or designing new life, they are constantly being ingrained into every aspect of our lives. Before these technologies get more than a few layers deep and become invisible in the hierarchy, we need to program human values into their foundational layers. Only then will we be able to neutralize their capacity to do harm and build safe and trustworthy systems on top of them.

For all of human existence, we have been at home in nature — we trust nature, not technology. And yet we look to technology to take care of our future — we hope in technology. So we hope in something we do not quite trust. There is an irony here. Technology, as I have said, is the programming of nature, the orchestration and use of nature’s phenomena. So in its deepest essence, it is natural, profoundly natural. But it does not feel natural.

— W. Brian Arthur, The Nature of Technology

*For further resources on this topic and others, check out this handbook on AI’s unintended consequences. This article is Chapter 4 of a four-part series that surveys the unintended consequences of AI.

AI’s black swans: Unforeseen consequences looming was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.
